One of the cool features in Azure LogAnalytics is the capability to do data-transformation before the data enters your LogAnalytics workspaces.

If you want to see my latest blog-series of articles about ‘mastering Azure logging’, please check the overview here

If you want to see my latest tutorial on setting up data transformation, please check it out here

I use this feature to normalize data and enrich data for Microsoft Sentinel environments and optimize costs by removing “noise” in logs. I have also used it to temporary stop millions of unnecessary log events caused by application errors or misconfigurations. Then I could investigate and fix the root cause of the issue.

To summarize, this feature can help your with:

| Category | Details |

| Remove sensitive data | You may have a data source that sends information you don’t want stored for privacy or compliancy reasons. Filter sensitive information. Filter out entire rows or just particular columns that contain sensitive information. Obfuscate sensitive information. For example, you might replace digits with a common character in an IP address or telephone number. |

| Enrich data with additional or calculated information | Use a transformation to add information to data that provides business context or simplifies querying the data later. Add a column with additional information. For example, you might add a column identifying whether an IP address in another column is internal or external. Add business specific information. For example, you might add a column indicating a company division based on location information in other columns. |

| Reduce data costs | Since you’re charged ingestion cost for any data sent to a Log Analytics workspace, you want to filter out any data that you don’t require to reduce your costs. Remove entire rows. For example, you might have a diagnostic setting to collect resource logs from a particular resource but not require all of the log entries that it generates. Create a transformation that filters out records that match a certain criteria. Remove a column from each row. For example, your data may include columns with data that’s redundant or has minimal value. Create a transformation that filters out columns that aren’t required. Parse important data from a column. You may have a table with valuable data buried in a particular column. Use a transformation to parse the valuable data into a new column and remove the original. Examples of where data-transformation is useful: We want to remove specific security-events from a server, which are making lots of ”noise” in our logs due to a misconfiguration or error and it is impossible to fix itWe want to remove security events, which we might show with a high amount, but we want to filter it out like kerberos computer-logon traffic. |

| Disclaimer about security & data-transformation From a security hunting perspective, it is important to note, that you should be very careful with this, as you might remove data which will make security hunting harder – or even impossible if vital data is removed or table structure is altered. Please do proper testing and be aware of the consequences. |

Supported ways of doing data-transformation

Currently, there are support for doing data-transformation using 4 methods:

- XPath filtering using Azure Monitor Agent

- Azure Monitor Agent (AMA) using TransformKql in Data Collection Rule (DCR)

- Custom application sending data using logs ingestion API via DCR

- Workspace transformation DCR

This blog will go deep-dive on method 2; ’Azure Monitor Agent (AMA) using TransformKql in Data Collection Rule (DCR)’. I will make another post on method 4 soon.

Before going into configurering data-transformation, I want to touch on the ”old” method using XPath (XML Path Language).

Transformation using XPath

Since AMA was launched in June 2021, it has supported doing transformation with XPath. This methods works fine and has helped my customers to save money.

Microsoft asked me to provide a statement about this in the officiel go-live announcement, when Azure Monitor Agent went GA in June 2021 (https://techcommunity.microsoft.com/t5/azure-observability-blog/a-powerful-agent-for-azure-monitor-and-a-simpler-world-of-data/ba-p/2443285).

Here is an example of using XPath where I am collecting all security events, excluding any computer-related security-events like Computer Kerberos logon-traffic

"Security!*[System[(band(Keywords,13510798882111488))]] and *[(EventData[Data[@Name='TargetUserName'] != 'EXCHANGE01$']) and (EventData[Data[@Name='TargetUserName'] != 'EXCHANGE02$']) and (EventData[Data[@Name='TargetUserName'] != 'EXCHANGE03$']) and (EventData[Data[@Name='TargetUserName'] != 'EXCHANGE04$']) and (EventData[Data[@Name='TargetUserName'] != 'EXCHANGE05$']) ]"AMA is still supporting XPath, but I recommend to use data transformation using DCR-rules, as it solves some of the limitations in the XPath implementation in AMA.

XPath is a standard, currently at 3.1 (2017), but Microsoft chose to implement XPath v1 in AMA from 1999. V1 doesn’t support filtering with like or contains. Therefore it is hard to scale using xpath v1, if you need advanced features.

In the sample above, I had to explicitly define the server-names where I wanted to remove computer-related security events.

How does transformation work using AMA & DCR ?

The main difference between XPath and data-transformation using DCR is where it runs, as XPath filtering is done on the endpoint, whereas data-transformation using a DCR runs in the Azure backend in a dedicated pipeline per DCR – being applied on each row of data coming in – before data is sent into Loganalytics.

Before going into the details on how to create a data-transformation in data collection rules, we will recap the principles about Data Collection Rules (DCRs) and data transformations.

Any resource using AMA is always collecting the union of all DCRs.

This way multiple teams / roles can enable what they need, without impacting other teams. If Microsoft switched the behavior, one team could disable other collection by accident.

Therefore a transformation will only impact that particular workspace where the transformation applies.

If you – by mistake – have 2 DCRs for SecurityEvent collection and use one of them for transformation, then you will end up having double of the events (except for the filtered away events).

As mentioned earlier, AMA will union all DCRs, so it is important, that you have a good overview of the current associated DCRs associated with the endpoints. Which data is being collected into which tables ?

Implementation of Data Collection using Data Collection Rules (DCRs)

This blog will not go into details on how to configure a data collection rule, but for reading purpose I will touch on this high-level.

Normally, I’m configuring a set of default DCRs that are being applied to all servers, both native Azure VMs and Azure Arc-enabled servers – for example:

- Collection of VMInsights (using Dependency Agent with AMA-support)

- Collection of System and Application event log

- Collection of Security event log

Targeting your DCRs

After defining the DCRs, you need to associate the DCRs to your resources. This can be done in multiple ways:

- Azure Policy and Tags – for example defining a tag.

- Scripts – for example using Powershell ’New-AzDataCollectionRuleAssociation’

- Terraform

Pick your choice depending on how you want to do this 😊

How to transform your data using TransformKql in a DCR using AMA

The process to create a separate DCR with data transformation is done in 7 steps:

- Kusto command must be defined

- Deploy a new DCR using Azure Monitor – or via Sentinel AMA-connector if transformation is for SecurityEvents

- Adding the TransformKql to the DCR

- Updating/uploading the modified DCR

- Verification of changes in DCR

- Assign the DCR to the machine(s) where the transformation must happend

- Wait for the DCR and transformation pipeline to kick in

Step 1: Kusto command must be defined

Typically, I start by defining the Kusto command to show all data; except the data we want to filter away.

Below are 4 samples

| SecurityEvent | where EventID != 5145 | Here I want to see all Security event except for EventID = 5145 |

| SecurityEvent | where (EventID != 8002) and (EventID != 5058) and (EventID != 4662) | Here I want to see all Security events except for EventID = 8002,5058,4662 |

| Event | where ( (EventID != 10016 and EventLog == “Application”) ) | Here I want to see all Event system and application events, except for application events with eventid 10016 |

| CommonSecurityLog | where (DeviceVendor !contains “sonicwall”) or ((DeviceVendor contains “sonicwall”) and (Activity contains “connection opened” or Activity contains “connection closed”) and (Protocol != “udp/dns”)) | Here I want to see all CEF/syslog where devicevendor is different from sonicwall like Cisco and all sonicwall events, except if protocol is udp/dns |

Note:

- Since the transformation is applied to each record individually, it can’t use any KQL operators that act on multiple records. Only operators that take a single row as input and return no more than one row are supported. For example, summarize isn’t supported since it summarizes multiple records. See Supported KQL features for a complete list of supported features.

- You cannot use cross-workspace references

- See this link for more information (https://learn.microsoft.com/en-us/azure/azure-monitor/essentials/data-collection-transformations-structure)

When the Kusto command is working as expected, then copy the Kusto query into Notepad and change the tablename to source – below I changed SecurityEvent with source

| source | where EventID != 5145 |

Lastly we need to change the Kusto query, so it will be applied as one line with escape characters

| source\n| where EventID != 5145 |

Tip

In case you are working with advanced queries, I take my Kusto query and paste it in an online converter, which will create everything with escape characters.

Personally, I use the online website https://jsonformatter.org/json-escape

| Transformation query (Kusto) | Transformation query for transformKql |

| source | where (Account != “WINSFTP\\autotest”) and (EventID != 4688 and EventID != 8002 and EventID != 4625) and (Account != “WORKGROUP\\WINSFTP$”) | source\r\n| where (Account != \”CVT-WINSFTP\\\\cvtautotest\”) and (EventID != 4688 and EventID != 8002 and EventID != 4625) and (Account != \”WORKGROUP\\\\CVT-WINSFTP$\”) |

Step 2: Deploy a new DCR

Configure a standard DCR rule and choose to collect what you want like all security events or all system and application events.

Make a note of the ResourceId of the DCR rule. You will use the ResourceId in step 3

Step 3: Adding the TransformKql to the DCR

I use a Powershell script to retrieve the DCR into a flat JSON-file; use Notepad++ to edit the file and add the needed transformation – and then upload the modified DCR again. Below I have created a sample folder C:\TMP where the file will be stored

The script can be found here on my github

Start by setting the variables $ResourceId and $FilePath

ResourceId was noted in the previous step 2

FilePath can be any path – it is only a temporary file used for this change – example

####################################################

# VARIABLES

####################################################

# here you put the ResourceID of the Data Collection Rules (a sample is provided below)

$ResourceId = "/subscriptions/xxxxxx/resourceGroups/rg-logworkspaces/providers/microsoft.insights/dataCollectionRules/dcr-ingest-exclude-security-eventid"

# here you put a path and file name where you want to store the temporary file-extract from DCR (a sample is provided below)

$FilePath = "c:\tmp\dcr-ingest-exclude-security-eventid.txt"

Connect to Azure

####################################################

# CONNECT TO AZURE

####################################################

Connect-AzAccountRun the export DCR

$DCR = Invoke-AzRestMethod -Path ("$ResourceId"+"?api-version=2021-09-01-preview") -Method GET

$DCR.Content | ConvertFrom-Json | ConvertTo-Json -Depth 20 | Out-File -FilePath $FilePathNow you have a JSON file in c:\tmp folder

Modify file and add TransformKql

Open the file using Notepad++ and add the line transformKql command that you created in step 1

"transformKql": "source\n| where (EventID != 5145)"

NOTE: Remember to add the , (comma) on the line before so you are not breaking the JSON structure.

Step 4: Update the DCR (Uploading the changed DCR from the file)

Now we want to run the last part of the powershell script, which will update the DCR taking the entire content of the local file and making a REST api call using a specific api version (2021-09-01-preview). TransformKql requires a specific api-version (2021-09-01-preview), so we need to communicate with that particular api-set using REST api.

####################################################

# UPLOAD FILE / UPDATE DCR WITH TRANSFORM

####################################################

$DCRContent = Get-Content $FilePath -Raw

Invoke-AzRestMethod -Path ("$ResourceId"+"?api-version=2021-09-01-preview") -Method PUT -Payload $DCRContentYou should be getting a StatusCode 200 with the PUT commmand, indicating everything it updated correctly

If there is is an error in the file structure, you will get an error 400

Step 5: Verification of changes in DCR

If you go and check the GUI for the changes, please remember to choose the correct API in the drop-down menu. Otherwise you wont be able to see the changes. You will now see the transformKql.

You can also extract the changes running the first lines again to extract into the local file using the GET command

####################################################

# EXPORT EXISTING DCR TO FILE

####################################################

$DCR = Invoke-AzRestMethod -Path ("$ResourceId"+"?api-version=2021-09-01-preview") -Method GET

$DCR.Content | ConvertFrom-Json | ConvertTo-Json -Depth 20 | Out-File -FilePath $FilePathStep 6: Associate the DCR rule to the machine(s)

Now you have to associate the DCR rule to the machine(s) where you want the transformation to happend.

Step 7: Wait for the DCR and transformation pipeline to kick in

Now we have to wait for pipeline transformation to happend. Normally it wil start within 5-10 minutes, but I have seen changes taking 30 min.

Troubleshooting

If you want to verify, that the local Azure Monitor Agent has received the DCR rule, this can be done using the local AMA files. AMA will check every 5 min for new DCR changes.

Verification using local AMA config files

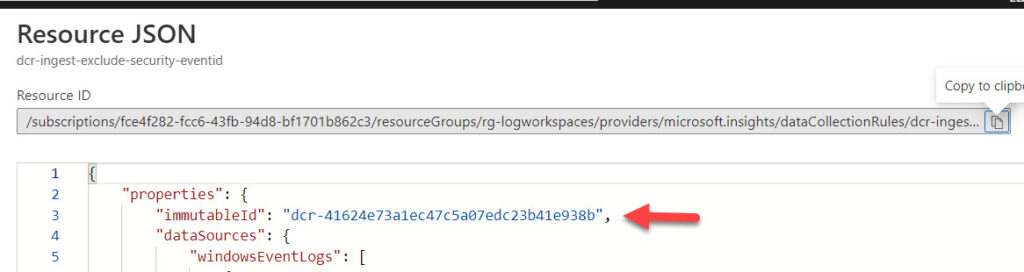

Open your DCR rule in JSON view and note the ImmuntableId of your DCR rule

Open the file C:\WindowsAzure\Resources\AMADataStore.DC1\mcs\mcsconfig.latest.xml (or mcsconfig.latest.json).

Search for the ImmuntableId. You should now see that it is using the new DCR rule.

If AMA is not receiving the DCR rule, please verify that you have linked the DCR rule to the server. Otherwise continue to the AMA troubleshooting below.

Azure Monitor Agent troubleshooting

Azure Monitor Agent has a troubleshooting Powershell-script, which might be able to help you.

Run the following Powershell script:

C:\Packages\Plugins\Microsoft.Azure.Monitor.AzureMonitorWindowsAgent\<latest version on your computer>\CollectAMALogs.ps1It will generate a zip-file on your desktop with all the log-files.

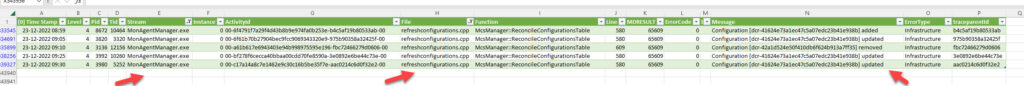

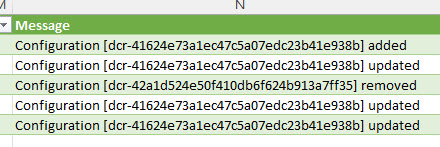

Open the file maeventtable.csv in Excel and filter

- Stream = MonAgentManager.exe

- File = refreshconfigurations.cpp

You should now be able to see the DCR changes

More resources about data transformation

- https://learn.microsoft.com/en-us/azure/azure-monitor/essentials/data-collection-transformations

- https://learn.microsoft.com/en-us/azure/azure-monitor/essentials/data-collection-rule-structure

3 thoughts on “How to do data transformation with Azure LogAnalytics – to enrich information, optimize cost, remove sensitive data?”