Today, we use logging for many purposes, including security hunting with a SIEM (Microsoft Sentinel), troubleshooting, performance telemetry and compliance reporting, but logging can also be very costly. In this blog, I will show you how I reduced my log costs by 89% (ingestion and query combined), with a 96% reduction on log ingestion alone.

As mentioned, one of the challenges I meet is the cost of the Analytics plan in Log Analytics for use cases with high ingestion and low retention needs. Recently, Microsoft released the newest table plan, the Auxiliary plan, which I find fits many of these scenarios perfectly. This blog covers cost comparisons, implementation and use cases.

Contents

- Use-cases for Auxiliary logs

- Can I switch my Syslog or SecurityEvent table to Auxiliary logs?

- Feature Comparison of Plans

- Cost Comparison of Plans

- Implementation of Auxiliary Logs

- Public Preview limitations

- More Info

Use-cases for Auxiliary logs

Auxiliary logs can be used to collect any custom data source that is sent into a custom log table using a Data Collection Rule (DCR). All data can be queried using Kusto Query Language (KQL): interactively for 30 days, and with search jobs for up to 12 years.
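Data outside the 30-day interactive window is queried with a search job, which writes its results into a new table whose name must end in `_SRCH`. The sketch below builds such a request, assuming the tables REST endpoint with `api-version=2021-12-01-preview` that is documented for search jobs; the helper name `New-SearchJobRequest`, the result table name and the query are hypothetical examples, not part of the original setup.

```powershell
# Sketch: build a search-job request against a table with the Auxiliary plan.
# New-SearchJobRequest is a hypothetical helper; the api-version is an assumption
# based on the Log Analytics tables REST API used for search jobs.
function New-SearchJobRequest {
    param(
        [Parameter(Mandatory)] [string]   $WorkspaceResourceId,
        [Parameter(Mandatory)] [string]   $ResultTableName,   # must end with _SRCH
        [Parameter(Mandatory)] [string]   $Query,
        [Parameter(Mandatory)] [datetime] $Start,
        [Parameter(Mandatory)] [datetime] $End,
        [int] $Limit = 1000
    )
    if (-not $ResultTableName.EndsWith('_SRCH')) {
        throw "Search job result tables must end with _SRCH"
    }
    # ISO 8601 UTC timestamps, formatted culture-independently
    $format = "yyyy-MM-dd'T'HH:mm:ss'Z'"
    $culture = [Globalization.CultureInfo]::InvariantCulture
    $payload = @{
        properties = @{
            searchResults = @{
                query           = $Query
                limit           = $Limit
                startSearchTime = $Start.ToUniversalTime().ToString($format, $culture)
                endSearchTime   = $End.ToUniversalTime().ToString($format, $culture)
            }
        }
    }
    [pscustomobject]@{
        # $() is needed because '?' is a legal character in PowerShell variable names
        Path    = "$WorkspaceResourceId/tables/$($ResultTableName)?api-version=2021-12-01-preview"
        Payload = $payload | ConvertTo-Json -Depth 10
    }
}

# Usage (requires Connect-AzAccount):
# $job = New-SearchJobRequest -WorkspaceResourceId "/subscriptions/xxxxxx/resourcegroups/rg-loganalytics/providers/microsoft.operationalinsights/workspaces/log-platform-management-errors-p" `
#     -ResultTableName 'CatRoot2Errors_SRCH' -Query 'CatRoot2ErrorsAuxiliary_CL | where RawData contains "-1811"' `
#     -Start (Get-Date).AddDays(-90) -End (Get-Date)
# Invoke-AzRestMethod -Path $job.Path -Method PUT -Payload $job.Payload
```

The results land in the `_SRCH` table, which can then be queried like any other table.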

Use cases include high-ingestion (verbose logging) sources such as storage access logs, NetFlow logs, proxy logs, IoT logs, firewall logs, CSV files, TXT files, etc.

They also include compliance use cases where logs must be stored long-term.

See the feature and cost comparisons later in this blog.

Can I switch my Syslog or SecurityEvent table to Auxiliary logs?

Currently, standard streams like Microsoft-CommonSecurityLog (Syslog/CEF), Microsoft-SecurityEvent (Windows Security Logs) and Microsoft-ServiceMap (VM Insights) cannot be stored in tables with the Auxiliary plan.

They must be stored in tables with the Analytics plan. The advantage is that Microsoft maintains the table schema, which ensures that hunting capabilities in Sentinel can keep evolving as Microsoft continues to enrich the data.

Feature Comparison of Plans

| Feature | Analytics plan | Basic plan | Auxiliary plan |
|---|---|---|---|
| Retention (short-term) | 31 days (90 days with Sentinel) | 30 days | 30 days |
| Retention (long-term) | 12 years | 12 years | 12 years |
| Query (interactive) | Up to 2 years | 30 days | 30 days |
| Query older data | Search jobs | Search jobs | Search jobs |
| Performance (interactive) | High | High | Medium (10-20% slower) |
| Query capabilities | Fast query performance with full KQL support | Fast query performance with full KQL on a single table and lookup to Analytics tables | Full KQL on a single table and lookup to Analytics tables |
| Log ingestion cost | $2.99 per GB | $0.65 per GB | $0.13 per GB |
| Query cost | Included | $0.0065 per GB of data scanned | $0.0065 per GB of data scanned |
| Sentinel query support (1) | Included | Included | Included |
| Summary rules support | Included | Included | Included |
| Alerting capability (2) | Included | Included | Included |

(1) Query cost applies per query for the Basic and Auxiliary plans.

(2) Query cost applies per query for the Basic and Auxiliary plans. Azure Monitor costs apply for all alerts.

Cost Comparison of Plans

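Below you will find a cost comparison of the Analytics, Basic and Auxiliary plans. The example assumes 100 GB of ingestion per day over 30.7 days per month and, for the Basic and Auxiliary plans, 100 queries per month that each scan 1,000 GB. As you can see, I save 89% using the Auxiliary plan compared with the Analytics plan (based on these assumptions).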

| Plan | Item | Amount | Days/month | USD/GB | USD/month | Savings (vs. Analytics plan) |
|---|---|---|---|---|---|---|
| Analytics | Ingestion | 100 GB/day | 30.7 | 2.99 | 9,179 | |
| Analytics | Query | | | | 0 | |
| Analytics | Total | | | | 9,179 | |
| Basic | Ingestion | 100 GB/day | 30.7 | 0.65 | 1,996 | |
| Basic | Query | 100 queries × 1,000 GB scanned per query | | 0.0065 | 650 | |
| Basic | Total | | | | 2,646 | 71% |
| Auxiliary | Ingestion | 100 GB/day | 30.7 | 0.13 | 399 | 96% |
| Auxiliary | Query | 100 queries × 1,000 GB scanned per query | | 0.0065 | 650 | |
| Auxiliary | Total | | | | 1,049 | 89% |

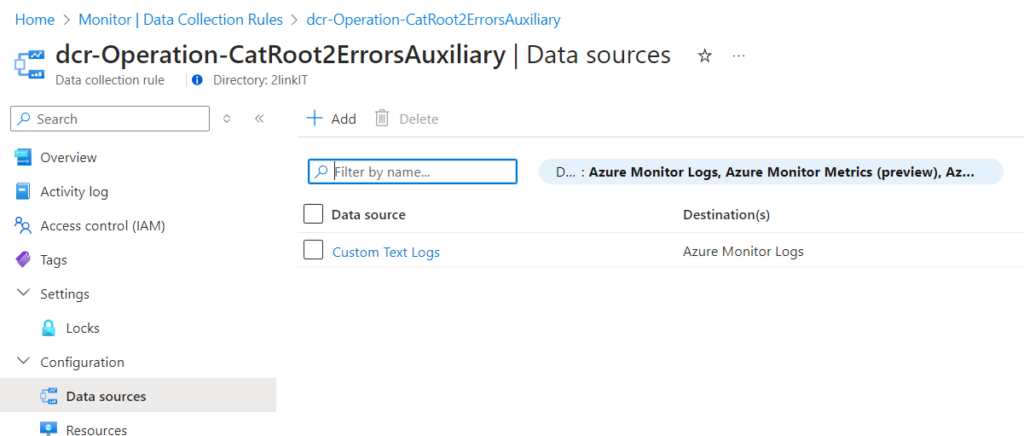
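The monthly figures above follow from two products: ingestion = GB per day × days per month × USD per GB, and query = number of queries × GB scanned per query × USD per GB scanned. The sketch below reproduces the table with the list prices quoted in this blog; prices differ per region and change over time, so treat them as inputs. `Get-MonthlyLogCost` is a hypothetical helper name.

```powershell
# Sketch: reproduce the monthly cost comparison from the table above.
# Prices are the ones quoted in this blog and are inputs, not authoritative rates.
function Get-MonthlyLogCost {
    param(
        [double] $GbPerDay,
        [double] $DaysPerMonth = 30.7,
        [double] $IngestionUsdPerGb,
        [int]    $QueriesPerMonth = 0,
        [double] $GbScannedPerQuery = 0,
        [double] $QueryUsdPerGb = 0
    )
    $ingestion = $GbPerDay * $DaysPerMonth * $IngestionUsdPerGb
    $query     = $QueriesPerMonth * $GbScannedPerQuery * $QueryUsdPerGb
    # Rounded to whole USD, like the table
    [pscustomobject]@{
        Ingestion = [math]::Round($ingestion)
        Query     = [math]::Round($query)
        Total     = [math]::Round($ingestion + $query)
    }
}

$analytics = Get-MonthlyLogCost -GbPerDay 100 -IngestionUsdPerGb 2.99
$basic     = Get-MonthlyLogCost -GbPerDay 100 -IngestionUsdPerGb 0.65 -QueriesPerMonth 100 -GbScannedPerQuery 1000 -QueryUsdPerGb 0.0065
$auxiliary = Get-MonthlyLogCost -GbPerDay 100 -IngestionUsdPerGb 0.13 -QueriesPerMonth 100 -GbScannedPerQuery 1000 -QueryUsdPerGb 0.0065

foreach ($plan in ([ordered]@{ Basic = $basic; Auxiliary = $auxiliary }).GetEnumerator()) {
    $saving = 1 - ($plan.Value.Total / $analytics.Total)
    '{0}: {1} USD/month, {2:P0} lower than Analytics' -f $plan.Key, $plan.Value.Total, $saving
}
```

Changing `-QueriesPerMonth` or `-GbScannedPerQuery` shows quickly where the query cost starts to eat up the ingestion savings for your own workload.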

Implementation of Auxiliary Logs

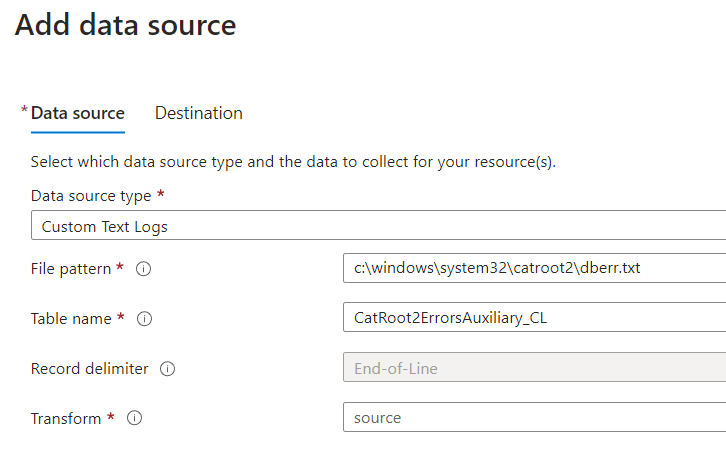

In this blog, I document how to collect the file dberr.txt in the folder C:\Windows\System32\CatRoot2 and look for any Error -1811, which indicates that the server was impacted by the August 2024 Windows Update issue covered in this article. The data will be stored in an Auxiliary table.
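Before rolling out collection, a single server can be checked by hand. A minimal local sketch, assuming it runs in PowerShell on the server itself, uses `Select-String` to find lines containing `-1811`; `Test-CatRoot2Error` is a hypothetical helper name, and the default path is the one used in the DCR file pattern later in this blog.

```powershell
# Sketch: check a local catroot2 log for lines containing "-1811".
# Test-CatRoot2Error is a hypothetical helper; the default path matches the DCR filePatterns.
function Test-CatRoot2Error {
    param(
        [string] $Path = "$env:windir\System32\catroot2\dberr.txt",
        [string] $Pattern = '-1811'
    )
    if (-not (Test-Path -LiteralPath $Path)) {
        return @()   # no log file, nothing to report
    }
    # -SimpleMatch treats the pattern as literal text instead of a regular expression
    @(Select-String -LiteralPath $Path -Pattern $Pattern -SimpleMatch)
}

$hits = @(Test-CatRoot2Error)
if ($hits.Count -gt 0) {
    "Possibly affected: $($hits.Count) line(s) contain -1811"
    $hits | Select-Object -First 5 | ForEach-Object { $_.Line }
}
```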

This sample collects content from a TXT file, so the steps to send data into an Auxiliary table are:

- Deploy a Data Collection Endpoint (DCE) in the region where the Log Analytics workspace is located.

- Create the Auxiliary table with the required schema

- Create the DCR

- Modify the DCR to extend it with the built-in property Computer, so we know which VM the data in the TXT file comes from

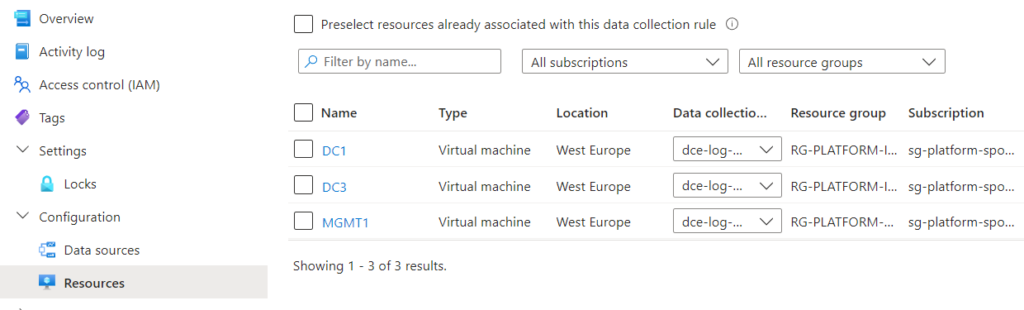

- Send data in using the Logs Ingestion API, or by associating the DCR with VMs in Azure.
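For sources that are not files on a VM, the Logs Ingestion API is the other route in. A minimal sketch, assuming a DCR whose stream declaration accepts `TimeGenerated`, `RawData` and `Computer` directly (the stream name, DCE URI and immutable ID below are placeholders, and `New-LogsIngestionRequest` is a hypothetical helper); only building the request is shown live, the authenticated POST is commented out.

```powershell
# Sketch: build a Logs Ingestion API request. Endpoint, DCR immutable ID and stream
# name are placeholders; New-LogsIngestionRequest is a hypothetical helper.
function New-LogsIngestionRequest {
    param(
        [Parameter(Mandatory)] [string]   $DceUri,          # e.g. https://<dce>.<region>-1.ingest.monitor.azure.com
        [Parameter(Mandatory)] [string]   $DcrImmutableId,  # e.g. dcr-xxxxxxxx
        [Parameter(Mandatory)] [string]   $StreamName,
        [Parameter(Mandatory)] [object[]] $Records
    )
    [pscustomobject]@{
        Uri  = "$($DceUri.TrimEnd('/'))/dataCollectionRules/$DcrImmutableId/streams/$($StreamName)?api-version=2023-01-01"
        # The API expects a JSON array, even for a single record
        Body = ConvertTo-Json -InputObject @($Records) -Depth 5
    }
}

# Auxiliary tables accept ISO 8601 timestamps only, so format TimeGenerated explicitly ("o" = round-trip ISO 8601)
$record = [pscustomobject]@{
    TimeGenerated = (Get-Date).ToUniversalTime().ToString('o')
    RawData       = 'Example line'
    Computer      = $env:COMPUTERNAME
}

$request = New-LogsIngestionRequest -DceUri 'https://<dce>.<region>-1.ingest.monitor.azure.com' `
    -DcrImmutableId 'dcr-xxxxxxxx' -StreamName 'Custom-CatRoot2ErrorsAuxiliary_CL' -Records $record

# Sending needs a token for https://monitor.azure.com and the Monitoring Metrics Publisher role on the DCR.
# Depending on the Az.Accounts version, .Token is a plain string or a SecureString.
# $token = (Get-AzAccessToken -ResourceUrl 'https://monitor.azure.com').Token
# Invoke-RestMethod -Uri $request.Uri -Method Post -Body $request.Body `
#     -ContentType 'application/json' -Headers @{ Authorization = "Bearer $token" }
```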

Detailed steps

1. Deploy a Data Collection Endpoint in the region where the Log Analytics workspace is located.

See this article for instructions on how to create a Data Collection Endpoint.

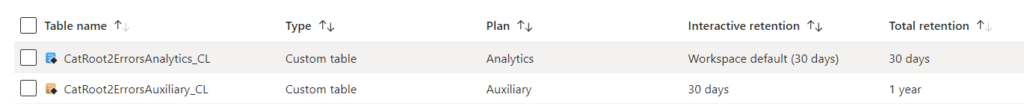

2. Pre-create the Auxiliary table with the required schema.

- In this sample, I create the table CatRoot2ErrorsAuxiliary_CL

```powershell
# Connect to Azure
Connect-AzAccount

# Create table CatRoot2ErrorsAuxiliary_CL with the Auxiliary plan
$PayLoad = @{
    properties = @{
        totalRetentionInDays = 365
        plan                 = "Auxiliary"
        schema               = @{
            name    = "CatRoot2ErrorsAuxiliary_CL"
            columns = @(
                @{ name = "TimeGenerated"; type = "DateTime" }
                @{ name = "RawData";       type = "String" }
                @{ name = "Computer";      type = "String" }
            )
        }
    }
}

$PayLoadJson = $PayLoad | ConvertTo-Json -Depth 20

$URI = "/subscriptions/xxxxxx/resourcegroups/rg-loganalytics/providers/microsoft.operationalinsights/workspaces/log-platform-management-errors-p/tables/CatRoot2ErrorsAuxiliary_CL?api-version=2023-01-01-preview"

Invoke-AzRestMethod -Path $URI -Method PUT -Payload $PayLoadJson
```

Note that the payload for an Auxiliary table is almost the same as for an Analytics table. The only difference is shown below:

```powershell
totalRetentionInDays = 365
plan = "Auxiliary"
```

3. Set up the collection of data, either using the Azure Monitor Agent or the Logs Ingestion API.

Below is a sample of collecting a text log file. The method is the same for Auxiliary and Analytics tables.

4. Modify the DCR to extend the collection with the Computer property

Once you have created the DCR, you can see the structure below in its JSON view. However, we need to add the built-in property Computer:

```json
{
  "properties": {
    "immutableId": "dcr-e1fxxxxxxxx",
    "dataCollectionEndpointId": "/subscriptions/xxxxxxxx-3f904de63b79/resourceGroups/rg-loganalytics/providers/Microsoft.Insights/dataCollectionEndpoints/dce-log-platform-management-operation-neu-p",
    "streamDeclarations": {
      "Custom-Text-CatRoot2ErrorsAuxiliary_CL": {
        "columns": [
          {
            "name": "TimeGenerated",
            "type": "datetime"
          },
          {
            "name": "RawData",
            "type": "string"
          }
        ]
      }
    },
    "dataSources": {
      "logFiles": [
        {
          "streams": [
            "Custom-Text-CatRoot2ErrorsAuxiliary_CL"
          ],
          "filePatterns": [
            "c:\\windows\\system32\\catroot2\\dberr.txt"
          ],
          "format": "text",
          "settings": {
            "text": {
              "recordStartTimestampFormat": "ISO 8601"
            }
          },
          "name": "Custom-Text-CatRoot2ErrorsAuxili"
        }
      ]
    },
    "destinations": {
      "logAnalytics": [
        {
          "workspaceResourceId": "/subscriptions/xxxxxx-3f904de63b79/resourceGroups/rg-loganalytics/providers/Microsoft.OperationalInsights/workspaces/log-platform-management-errors-p",
          "workspaceId": "xxxxx",
          "name": "la-2145994651"
        }
      ]
    },
    "dataFlows": [
      {
        "streams": [
          "Custom-Text-CatRoot2ErrorsAuxiliary_CL"
        ],
        "destinations": [
          "la-2145994651"
        ],
        "outputStream": "Custom-CatRoot2ErrorsAuxiliary_CL"
      }
    ],
    "provisioningState": "Succeeded"
  },
  "location": "northeurope",
  "tags": {},
  "kind": "Windows",
  "id": "/subscriptions/xxxxxxxxx/resourceGroups/rg-loganalytics/providers/Microsoft.Insights/dataCollectionRules/dcr-Operation-CatRoot2ErrorsAuxiliary",
  "name": "dcr-Operation-CatRoot2ErrorsAuxiliary",
  "type": "Microsoft.Insights/dataCollectionRules",
  "etag": "\"5a00e210-0000-0c00-0000-66e0bce80000\"",
  "systemData": {
    "createdBy": "mok@2linkit.net",
    "createdByType": "User",
    "createdAt": "2024-09-10T21:28:42.806711Z",
    "lastModifiedBy": "mok@2linkit.net",
    "lastModifiedByType": "User",
    "lastModifiedAt": "2024-09-10T21:40:55.2792351Z"
  }
}
```

To add the Computer property, we will do the following 3 steps:

- Retrieve the entire DCR in JSON format using the REST API (GET), and save it to a TXT file

- Edit the file, and add the Computer column in the streamDeclarations section

- Upload the entire file content using the REST API (PUT)

4.1 Get the DCR and save it to a local JSON file
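Instead of editing the exported file by hand, the same GET, edit and PUT sequence can also be done in memory. The sketch below, assuming the DCR JSON shape shown above, adds a column to one stream declaration and skips it if it already exists; `Add-DcrStreamColumn` is a hypothetical helper name, and the Azure calls (same `$ResourceId` and api-version as in step 4.1) are commented out so the function can be tried on any JSON text.

```powershell
# Sketch: add a column to a DCR stream declaration without hand-editing JSON.
# Add-DcrStreamColumn is a hypothetical helper working on the JSON returned by GET.
function Add-DcrStreamColumn {
    param(
        [Parameter(Mandatory)] [string] $DcrJson,
        [Parameter(Mandatory)] [string] $StreamName,
        [Parameter(Mandatory)] [string] $ColumnName,
        [string] $ColumnType = 'string'
    )
    $dcr  = $DcrJson | ConvertFrom-Json
    $decl = $dcr.properties.streamDeclarations.$StreamName
    if ($null -eq $decl) { throw "Stream '$StreamName' not found in streamDeclarations" }

    # Only add the column if it is not declared yet, so the script can be re-run safely
    if (-not (@($decl.columns) | Where-Object { $_.name -eq $ColumnName })) {
        $decl.columns = @($decl.columns) + [pscustomobject]@{ name = $ColumnName; type = $ColumnType }
    }
    $dcr | ConvertTo-Json -Depth 20
}

# $DCR     = Invoke-AzRestMethod -Path ("$ResourceId" + "?api-version=2022-06-01") -Method GET
# $newJson = Add-DcrStreamColumn -DcrJson $DCR.Content -StreamName 'Custom-Text-CatRoot2ErrorsAuxiliary_CL' -ColumnName 'Computer'
# Invoke-AzRestMethod -Path ("$ResourceId" + "?api-version=2022-06-01") -Method PUT -Payload $newJson
```

Generating the JSON with `ConvertTo-Json` also avoids the missing-comma problem that hand edits can introduce.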

```powershell
# Here you put the resource ID of the Data Collection Rule (a sample is provided below)
$ResourceId = "/subscriptions/7e867037-59b5-4edc-97ee-3f904de63b79/resourceGroups/rg-loganalytics/providers/Microsoft.Insights/dataCollectionRules/dcr-Operation-CatRoot2ErrorsAuxiliary"

# Here you put a path and file name where you want to store the temporary export of the DCR (a sample is provided below)
$FilePath = "c:\tmp\dcr-export.txt"

####################################################
# Get DCR
####################################################
$DCR = Invoke-AzRestMethod -Path ("$ResourceId"+"?api-version=2022-06-01") -Method GET
$DCR.Content | ConvertFrom-Json | ConvertTo-Json -Depth 20 | Out-File -FilePath $FilePath
```

4.2 Add these lines in the streamDeclarations section:

```json
"streamDeclarations": {
  "Custom-Text-CatRoot2ErrorsAuxiliary_CL": {
    "columns": [
      {
        "name": "TimeGenerated",
        "type": "datetime"
      },
      {
        "name": "RawData",
        "type": "string"
      },
      {
        "name": "Computer",
        "type": "string"
      }
    ]
  }
},
```

Don’t forget the comma after the preceding } so the syntax of the JSON file doesn’t break!

4.3 Upload the modified JSON and overwrite the DCR using the REST API (PUT)
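A quick way to catch a syntax error such as a missing comma before uploading is to parse the edited file locally, because `ConvertFrom-Json` fails on malformed JSON. A minimal sketch; `Test-JsonFile` is a hypothetical helper name and `$FilePath` is the variable from step 4.1.

```powershell
# Sketch: verify that the edited DCR export is still valid JSON before the PUT.
# Test-JsonFile is a hypothetical helper; ConvertFrom-Json fails on syntax errors.
function Test-JsonFile {
    param([Parameter(Mandatory)] [string] $Path)
    try {
        $null = Get-Content -LiteralPath $Path -Raw | ConvertFrom-Json -ErrorAction Stop
        $true
    }
    catch {
        # ${Path} braces are needed because "$Path:" would be read as a scope/drive qualifier
        Write-Warning "Invalid JSON in ${Path}: $($_.Exception.Message)"
        $false
    }
}

# Stop before uploading a broken file:
# if (-not (Test-JsonFile -Path $FilePath)) { throw "Fix the JSON in $FilePath before uploading" }
```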

```powershell
####################################################
# UPLOAD FILE / UPDATE DCR
####################################################
$DCRContent = Get-Content $FilePath -Raw
Invoke-AzRestMethod -Path ("$ResourceId"+"?api-version=2022-06-01") -Method PUT -Payload $DCRContent
```

Your DCR will now look like this in the JSON view:

```json
{
  "properties": {
    "immutableId": "dcr-exxxxxxxxxf50",
    "dataCollectionEndpointId": "/subscriptions/xxxxxxxxxxx3b79/resourceGroups/rg-loganalytics/providers/Microsoft.Insights/dataCollectionEndpoints/dce-log-platform-management-operation-neu-p",
    "streamDeclarations": {
      "Custom-Text-CatRoot2ErrorsAuxiliary_CL": {
        "columns": [
          {
            "name": "TimeGenerated",
            "type": "datetime"
          },
          {
            "name": "RawData",
            "type": "string"
          },
          {
            "name": "Computer",
            "type": "string"
          }
        ]
      }
    },
    "dataSources": {
      "logFiles": [
        {
          "streams": [
            "Custom-Text-CatRoot2ErrorsAuxiliary_CL"
          ],
          "filePatterns": [
            "c:\\windows\\system32\\catroot2\\dberr.txt"
          ],
          "format": "text",
          "settings": {
            "text": {
              "recordStartTimestampFormat": "ISO 8601"
            }
          },
          "name": "Custom-Text-CatRoot2ErrorsAuxili"
        }
      ]
    },
    "destinations": {
      "logAnalytics": [
        {
          "workspaceResourceId": "/subscriptions/xxxxxxxxxb79/resourceGroups/rg-loganalytics/providers/Microsoft.OperationalInsights/workspaces/log-platform-management-errors-p",
          "workspaceId": "58c7aa3e-a321-4fdb-99cf-d1096b0f0251",
          "name": "la-2145994651"
        }
      ]
    },
    "dataFlows": [
      {
        "streams": [
          "Custom-Text-CatRoot2ErrorsAuxiliary_CL"
        ],
        "destinations": [
          "la-2145994651"
        ],
        "outputStream": "Custom-CatRoot2ErrorsAuxiliary_CL"
      }
    ],
    "provisioningState": "Succeeded"
  },
  "location": "northeurope",
  "tags": {},
  "kind": "Windows",
  "id": "/subscriptions/xxxxxxxxxxxx/resourceGroups/rg-loganalytics/providers/Microsoft.Insights/dataCollectionRules/dcr-Operation-CatRoot2ErrorsAuxiliary",
  "name": "dcr-Operation-CatRoot2ErrorsAuxiliary",
  "type": "Microsoft.Insights/dataCollectionRules",
  "etag": "\"ca00062f-0000-0c00-0000-66e566df0000\"",
  "systemData": {
    "createdBy": "mok@2linkit.net",
    "createdByType": "User",
    "createdAt": "2024-09-10T21:28:42.806711Z",
    "lastModifiedBy": "mok@2linkit.net",
    "lastModifiedByType": "User",
    "lastModifiedAt": "2024-09-14T10:35:10.2224854Z"
  }
}
```

5. You are now almost good to go. You just need to associate the VMs from which you want to collect the content of the file and send it into the Auxiliary log table.

NOTE:

- It may take 10 to 15 minutes before the pipeline is active and data is ready to flow.

- Only new content is collected, not what is already in the file. New data will only come in after the file (dberr.txt) changes, for example after you edit it or restart the VM.
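The association in step 5 can also be scripted. A minimal sketch, assuming the `dataCollectionRuleAssociations` REST resource on the VM with api-version `2022-06-01` (the version used for the DCR above) and the `Invoke-AzRestMethod` cmdlet used throughout this blog; the VM resource ID, the derived association name and the helper `New-DcrAssociationRequest` are placeholders. The VMs also need the Azure Monitor Agent installed.

```powershell
# Sketch: associate a DCR with a VM through the dataCollectionRuleAssociations REST resource.
# New-DcrAssociationRequest is a hypothetical helper; resource IDs below are placeholders.
function New-DcrAssociationRequest {
    param(
        [Parameter(Mandatory)] [string] $VmResourceId,
        [Parameter(Mandatory)] [string] $DcrResourceId,
        [string] $AssociationName
    )
    if (-not $AssociationName) {
        # Derive a readable name from the DCR name, e.g. dcra-dcr-Operation-CatRoot2ErrorsAuxiliary
        $AssociationName = 'dcra-' + ($DcrResourceId.TrimEnd('/') -split '/')[-1]
    }
    [pscustomobject]@{
        Path    = "$($VmResourceId.TrimEnd('/'))/providers/Microsoft.Insights/dataCollectionRuleAssociations/$($AssociationName)?api-version=2022-06-01"
        Payload = @{ properties = @{ dataCollectionRuleId = $DcrResourceId } } | ConvertTo-Json -Depth 5
    }
}

$vmIds = @(
    '/subscriptions/xxxxxx/resourceGroups/<rg>/providers/Microsoft.Compute/virtualMachines/<vm-name>'
)
$dcrId = '/subscriptions/xxxxxxxxx/resourceGroups/rg-loganalytics/providers/Microsoft.Insights/dataCollectionRules/dcr-Operation-CatRoot2ErrorsAuxiliary'

foreach ($vmId in $vmIds) {
    $req = New-DcrAssociationRequest -VmResourceId $vmId -DcrResourceId $dcrId
    # Invoke-AzRestMethod -Path $req.Path -Method PUT -Payload $req.Payload
}
```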

Public preview limitations

Region

Americas

- Canada Central

- Central US

- East US

- East US 2

- West US

- South Central US

- North Central US

Asia Pacific

- Australia East

- Australia Southeast

- East Asia

Europe

- North Europe

- UK South

- Germany West Central

- Switzerland North

- France Central

Middle East

- Israel Central

Important information about tables with the Auxiliary plan

- Are currently unbilled. There’s currently no charge for ingestion, queries, search jobs, and long-term retention.

- Do not support columns with dynamic data.

- Have a fixed total retention of 365 days.

- Support ISO 8601 datetime format only.
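Because Auxiliary tables don't support dynamic columns and use a fixed retention during the preview, it can help to check the payload from step 2 before the PUT. A minimal sketch, assuming the `$PayLoad` hashtable shape used in step 2; `Test-AuxiliarySchema` is a hypothetical helper and only checks the rules above that can be verified from the payload itself.

```powershell
# Sketch: check an Auxiliary table payload (same shape as $PayLoad in step 2)
# against the preview rules listed above. Test-AuxiliarySchema is a hypothetical helper.
function Test-AuxiliarySchema {
    param([Parameter(Mandatory)] [hashtable] $Payload)
    $problems = @()
    $props = $Payload.properties

    if ($props.plan -ne 'Auxiliary') {
        $problems += "plan is '$($props.plan)', expected 'Auxiliary'"
    }
    if ($props.totalRetentionInDays -ne 365) {
        $problems += "totalRetentionInDays is $($props.totalRetentionInDays); the preview uses a fixed 365 days"
    }
    foreach ($column in $props.schema.columns) {
        # String -eq is case-insensitive, so 'Dynamic' and 'dynamic' both match
        if ($column.type -eq 'dynamic') {
            $problems += "column '$($column.name)' uses the unsupported dynamic type"
        }
    }
    $problems
}

# $issues = @(Test-AuxiliarySchema -Payload $PayLoad)
# if ($issues.Count) { $issues; throw 'Fix the table schema before creating the table' }
```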

Limitations for Data Collection Rules that send data to a table with the Auxiliary plan

- Can only send data to a single table.

- Can’t include a transformation.
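The two DCR limitations can be checked against the JSON returned by the GET in step 4.1. A minimal sketch; `Test-AuxiliaryDcr` is a hypothetical helper, it assumes a transformation appears as a `transformKql` property on a data flow, and treating the identity transformation `source` as "no transformation" is an assumption of this sketch.

```powershell
# Sketch: check a DCR (JSON text from the GET in step 4.1) against the Auxiliary DCR limits.
# Test-AuxiliaryDcr is a hypothetical helper.
function Test-AuxiliaryDcr {
    param([Parameter(Mandatory)] [string] $DcrJson)
    $flows = @(($DcrJson | ConvertFrom-Json).properties.dataFlows)
    $problems = @()

    # Limit 1: all data flows must end up in a single table
    $tables = @($flows | ForEach-Object { $_.outputStream } | Where-Object { $_ } | Sort-Object -Unique)
    if ($tables.Count -gt 1) {
        $problems += "data is sent to $($tables.Count) tables: $($tables -join ', ')"
    }

    # Limit 2: no transformation (assumption: 'source' is the identity and is allowed)
    foreach ($flow in $flows) {
        if ($flow.PSObject.Properties['transformKql'] -and $flow.transformKql -ne 'source') {
            $problems += "data flow to '$($flow.outputStream)' has transformKql: $($flow.transformKql)"
        }
    }
    $problems
}

# $issues = @(Test-AuxiliaryDcr -DcrJson $DCR.Content)
```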
