Azure includes many great technologies that can be used for logging. Microsoft is currently transitioning from the v1 method (MMA) to the v2 method using Data Collection Rules (DCRs).

This article explains the main differences between the legacy method (v1, MMA) and the new DCR method (v2). I am already a big fan of DCRs; check out why below.

This blog also gives a high-level overview of the options, structured into:

- Data-In (sources)

- Data-Transformation

- Data-Out

Finally, the article covers the deprecation path for the v1 technologies.

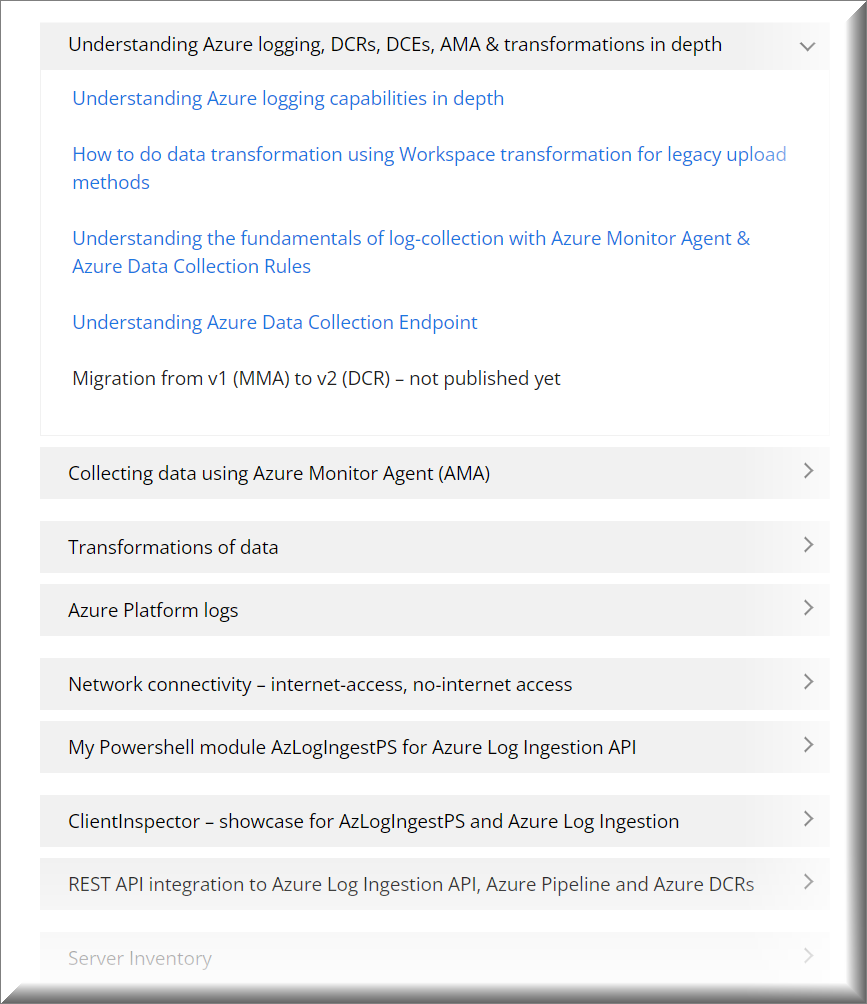

This blog-post is part of a series of blog posts to master Azure logging in depth (overview).

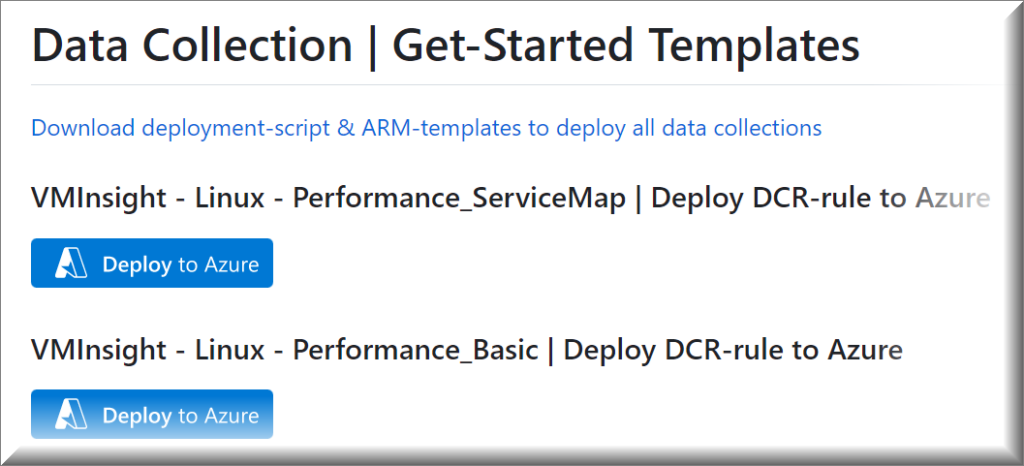

To get you started, you can find ARM-templates & scripts in my AzureLogLibrary (github). Details will be covered in the articles.

Quick Links

Technology overview

What is the change in architecture between v1 (MMA) and v2 (DCR)?

Data-in

Data-transformation

Supported ways of doing data transformation

Transformation using XPath

Transformation with Azure Monitor Agent (AMA) & Azure Data Collection Rules (DCR)

Transformation with Azure DCR-pipeline (Log Ingestion API) & Azure Data Collection Rule (DCR)

Transformation with Azure LogAnalytics Workspace Data Collection Rule (DCR)

Examples of transformations, based on Kusto syntax

Cost for transformations

Data-out

Azure Log technologies being deprecated

Accessing data using Kusto

Accessing data using Powershell

Technology overview

The following technologies are used to deliver data-collection, data-query and data-presentation in Azure.

| Abbreviation | Technology | Role | Usage |
|---|---|---|---|
| AMA | Azure Monitor Agent | Data-In (collection) | Agent (or extension) running on Windows and Linux endpoints. Collects the data defined in the DCR |
| DCR | Azure Data Collection Rule | Data-Transformation | Definition (JSON format), which includes: what to collect (data sources); how to send the data (streams); the format to send the data in (schema); the extension to use (optional); dataflows/transformations (what to do with the data); destinations (where to send the data) |
| DCE | Azure Data Collection Endpoint | Data-In (collection) | Log ingestion endpoint for data that needs to be transformed during upload (e.g. REST API, IIS logs) |
| KQL | Kusto Query Language | Data-query | |
| WB | Azure Workbooks | Data-view | |
| DB | Azure Dashboard | Data-view | |

What is the change in architecture between v1 (MMA) and v2 (DCR)?

For the last 5 years, I have been using the Log Analytics Data Collector API, also referred to as the 'Azure Monitor HTTP Data Collector API', or by my short name for it, the "MMA-method" (v1).

Don't be confused when you search the internet for 'Azure Monitor HTTP Data Collector API' and it says that it is in public preview. It is still the legacy API, which will be replaced by the Log Ingestion API and DCRs.

Quote from the product team: "Data Collector API was never officially released or considered "complete". We are going to update Data Collector API documentation as part of its deprecation cycle"

I have been using the API with my PowerShell scripts to upload 'tons' of custom data into Azure LogAnalytics. On top of that, I provide 35 Azure dashboards that give me (and my customers) great insight into the health and security of their environments.

In v1, everything was defined in the backend using the concept of "solutions". It was very hard to target where you wanted to collect specific data from. Transformation options were also limited, as you could only use XPath.

Microsoft has implemented a limited subset of XPath 1.0, which doesn't support filtering with, for example, like or contains.

In v2, using Data Collection Rules, an intermediate layer, the Azure Data Ingestion Pipeline, handles the data upload from the endpoints based on the Data Collection Rules. Data Collection Rules (DCRs) determine how telemetry sent to Azure is collected and processed.

I am a big fan of Azure Data Ingestion Pipeline and Azure Data Collection Rules because:

- possibility to remove data before it is sent into LogAnalytics (remove "noise" data, cost optimization, GDPR/compliance)

- possibility to add data before it is sent into LogAnalytics

- possibility to merge data before it is sent into LogAnalytics

- better data quality for array data, as arrays are stored as dynamic, whereas the old MMA method converted arrays into strings

- support for sending data to other destinations

- support for collecting file-based logs (text logs, Windows Firewall logs)

- advanced collection of performance data (including new collection types like SQL performance counters)

- support for collecting SNMP trap logs

- security is based on Azure AD RBAC

- column names are prettier, as they contain the actual name, not for example ComputerName_s to indicate a string value
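To make the column-naming and array points above concrete, here is a simplified Python sketch of how one record would end up as columns in each method. It is an illustration of the behaviour described in this article, not the actual service logic: the suffixes follow the v1 HTTP Data Collector API convention (`_s` string, `_b` boolean, `_d` double), GUID (`_g`) and datetime (`_t`) detection is left out, and the function names `v1_columns`/`v2_columns` are hypothetical.

```python
import json
from typing import Any, Dict


def v1_columns(record: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical sketch of v1 (HTTP Data Collector API) column naming.

    A type suffix is appended to every field name, and arrays/objects are
    flattened to JSON strings, as described in the article. GUID (_g) and
    datetime (_t) detection is intentionally left out.
    """
    columns: Dict[str, Any] = {}
    for name, value in record.items():
        if isinstance(value, bool):  # check bool before int: bool is a subclass of int
            columns[f"{name}_b"] = value
        elif isinstance(value, (int, float)):
            columns[f"{name}_d"] = float(value)
        elif isinstance(value, (list, dict)):
            columns[f"{name}_s"] = json.dumps(value)
        else:
            columns[f"{name}_s"] = str(value)
    return columns


def v2_columns(record: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical sketch of v2 (DCR): names are kept and arrays stay structured (dynamic)."""
    return dict(record)


record = {"ComputerName": "PC01", "Disks": ["C:", "D:"], "Online": True, "RamGB": 16}
print(v1_columns(record))
print(v2_columns(record))
```

In the v1 output, `Disks` becomes the string column `Disks_s`, so you need to parse it again in every query; in v2 it stays an array you can use directly.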

There are also some challenges:

- complexity is higher, because more "middle tiers" are involved (DCR, DCE)

- the table, DCR and schema must be defined before you can send data

I have built a PowerShell module, AzLogDcrIngestPS, which deals with these challenges. You can check out the documentation on GitHub. It can also be downloaded from the PowerShell Gallery.

Data-in (sources)

Microsoft supports data from the following sources (data-in):

| Collection source | Technologies required | Flow |
|---|---|---|
| (legacy) Performance, Eventlog, Syslog | MMA (legacy) | MMA → Azure LogAnalytics |
| (legacy) API | HTTP Data Collector API (legacy) | REST API endpoint → Azure LogAnalytics |
| Performance, Eventlog, Syslog | AMA, DCR | AMA → DCR ingestion pipeline → Azure LogAnalytics |
| Text logs, IIS logs, Windows Firewall logs (preview) | AMA, DCR, DCE | AMA → DCE → DCR ingestion pipeline → Azure LogAnalytics |
| SNMP traps | Linux with SNMP trap receiver, plus AMA and DCR (syslog file) or AMA and DCR (syslog stream) | AMA → DCR ingestion pipeline → Azure LogAnalytics |
| Change Tracking (legacy) | Change Tracking Extension (FIM), DCR | FIM → DCR ingestion pipeline → Azure LogAnalytics |
| REST Log Ingestion API | REST endpoint, DCR, DCE | REST endpoint → DCE → DCR ingestion pipeline → Azure LogAnalytics |
| Platform Metrics/Telemetry (standard Azure PaaS) | DCR (built-in, non-manageable) | Azure resource → DCR ingestion pipeline → Azure Monitor Metrics |
| Custom Metrics/Telemetry (custom app) | Windows (1): AMA, DCR; or Windows (2): Azure Diagnostics extension; or API: Azure Monitor REST API; or Linux: InfluxData Telegraf agent with the Azure Monitor output plugin | Windows (1): AMA → DCR ingestion pipeline → Azure LogAnalytics. Windows (2): Azure Diagnostics → Azure LogAnalytics. API: REST endpoint → DCE → DCR ingestion pipeline → Azure LogAnalytics. Linux: InfluxData Telegraf → Azure Monitor output plugin → Azure LogAnalytics |
| Platform logs (diagnostics per resource): AllMetrics, Resource logs (allLogs, audit) | Azure Policy (diagnostics), DCR | Azure resource → DCR ingestion pipeline → Azure LogAnalytics |
| Activity logs (audit per subscription) | Azure Policy (diagnostics), DCR | Azure resource → DCR ingestion pipeline → Azure LogAnalytics |

I will be covering collecting the above data sources in later blog posts, which can be found in the overview here
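For the REST Log Ingestion API source in the table above, the DCE, the DCR and the stream all meet in the request URL. The Python sketch below only builds (does not send) such a request with the standard library. It assumes the documented URL pattern `{DCE endpoint}/dataCollectionRules/{DCR immutable id}/streams/{stream name}?api-version=2023-01-01` and a bearer token obtained separately; the endpoint, DCR id, stream name, token and the function name are placeholders/hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, List

API_VERSION = "2023-01-01"  # assumption: GA api-version of the Logs Ingestion API


def build_ingestion_request(
    dce_endpoint: str,
    dcr_immutable_id: str,
    stream_name: str,
    records: List[Dict[str, Any]],
    access_token: str,
) -> urllib.request.Request:
    """Build (but do not send) a POST request for the Log Ingestion API."""
    url = (
        f"{dce_endpoint.rstrip('/')}/dataCollectionRules/{dcr_immutable_id}"
        f"/streams/{stream_name}?api-version={API_VERSION}"
    )
    body = json.dumps(records).encode("utf-8")  # the body is a JSON array of records
    headers = {
        "Authorization": f"Bearer {access_token}",
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


request = build_ingestion_request(
    "https://my-dce.westeurope-1.ingest.monitor.azure.com",  # placeholder DCE endpoint
    "dcr-00000000000000000000000000000000",  # placeholder DCR immutable id
    "Custom-InvClientComputerInfo_CL",  # placeholder stream name declared in the DCR
    [{"TimeGenerated": "2023-01-01T00:00:00Z", "Computer": "PC01"}],
    "<token>",  # placeholder access token
)
print(request.get_method(), request.full_url)
```

With a real token, `urllib.request.urlopen(request)` would send it. The records must match the stream schema declared in the DCR, which is exactly why the table/DCR/schema has to exist before you send data.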

Data-transformation

As mentioned, data transformation is a new feature of Data Collection Rules, which can be used with AMA DCRs, workspace DCRs and the Log Ingestion API.

Transformations define which data should be sent through the pipeline.

If you want to exclude specific events, you tell the transformation to exclude them. For example, to exclude EventID 4662:

```kql
source | where (EventID != 4662)
```

Data transformations run in the Azure Data Ingestion Pipeline and are applied very quickly as the data is being uploaded.

Data transformations are defined in the transformKql property in the dataFlows section of the DCR:

```json
"dataFlows": [
  {
    "streams": [
      "Microsoft-SecurityEvent"
    ],
    "destinations": [
      "DataCollectionEvent"
    ],
    "transformKql": "source | where (EventID != 8002) and (EventID != 5058) and (EventID != 4662)",
    "outputStream": "Microsoft-SecurityEvent"
  }
]
```

Since the transformation is applied to each record individually, it can't use any KQL operators that act on multiple records.

Only operators that take a single row as input and return no more than one row are supported.

For example, summarize isn’t supported since it summarizes multiple records. See Supported KQL features for a complete list of supported features.
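The per-record rule can be pictured as a function that takes one record and returns zero or one record. The Python sketch below mimics the transformKql filter from the dataFlows example (dropping EventIDs 8002, 5058 and 4662); it is only an analogy for the semantics, not how the ingestion pipeline is implemented, and it assumes every record has an EventID field. The helper names are hypothetical.

```python
from typing import Callable, Dict, Iterable, Iterator, Optional

Record = Dict[str, object]
# A row-level transformation receives one record and returns at most one record;
# returning None means the record is dropped.
RowTransform = Callable[[Record], Optional[Record]]


def exclude_event_ids(*event_ids: int) -> RowTransform:
    """Rough equivalent of: source | where (EventID != 8002) and (EventID != 5058) and ..."""
    blocked = frozenset(event_ids)

    def transform(record: Record) -> Optional[Record]:
        return None if record["EventID"] in blocked else record

    return transform


def run_pipeline(records: Iterable[Record], transform: RowTransform) -> Iterator[Record]:
    """Apply the transformation to each record independently.

    No state is carried from one record to the next, which is why operators
    that need several records at once (such as summarize) can't be expressed.
    """
    for record in records:
        result = transform(record)
        if result is not None:
            yield result


events = [{"EventID": 4624}, {"EventID": 4662}, {"EventID": 8002}, {"EventID": 4625}]
kept = list(run_pipeline(events, exclude_event_ids(8002, 5058, 4662)))
print([e["EventID"] for e in kept])  # [4624, 4625]
```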

As shown below, you can do great things with the concept of data transformation:

| Category | Details |
|---|---|
| Compliance | You may have a data source that sends information you don't want stored for privacy or compliance reasons, for example to support GDPR. Filter sensitive information: filter out entire rows or just the columns that contain sensitive information (UserNames, PersonalID, PhoneNumbers). Obfuscate sensitive information: for example, replace digits with a common character in an IP address or telephone number (40 178 xxx). |
| Enrich data | Use a transformation to add information that provides business context or simplifies querying the data later. Add a column with additional information: for example, a column identifying whether a server is internet-connected or has no internet access, or whether an IP address is internal or external. Add business-specific information: for example, a column showing the company tenantId if you have multiple tenants. |
| Cost optimizations | Since you're charged per GB, you want to filter out any data you don't need to reduce your costs. Remove entire rows: for example, remove all entries (rows) coming from a specific server if the events contain a specific EventID. Remove a column from each row: for example, your data may include columns with redundant or low-value data. If you parse RawData in Syslog into multiple columns, you can remove the RawData column once the data has been parsed. Parse important data from a column: you may have a table with valuable data buried in a particular column. Examples where data transformation is useful: removing specific security events from a server that create lots of "noise" in the logs due to a misconfiguration or error that cannot be fixed; removing high-volume security events you don't need, such as Kerberos computer-logon traffic. |
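In a DCR these examples would be written in KQL in transformKql. The Python sketch below only mimics the per-row effect of one example from each category (obfuscate, enrich, drop a column) so you can see what happens to a single record. The column names (PhoneNumber, SrcIP, RawData, TenantId), the tenant value and the choice to keep 5 digits are hypothetical, and Python's `ipaddress.is_private` is used as a stand-in for "internal".

```python
import ipaddress
from typing import Any, Dict


def mask_digits(value: str, keep: int = 5) -> str:
    """Keep the first `keep` digits and replace the rest with 'x' (e.g. '40 178 123' -> '40 178 xxx')."""
    seen = 0
    masked = []
    for ch in value:
        if ch.isdigit():
            seen += 1
            masked.append(ch if seen <= keep else "x")
        else:
            masked.append(ch)
    return "".join(masked)


def transform_row(row: Dict[str, Any], tenant_id: str = "contoso-tenant") -> Dict[str, Any]:
    """Hypothetical row-level transformation combining the three categories in the table."""
    out = dict(row)
    # Compliance: obfuscate a sensitive column
    if "PhoneNumber" in out:
        out["PhoneNumber"] = mask_digits(str(out["PhoneNumber"]))
    # Enrich: flag internal vs. external IP addresses and add business context
    if "SrcIP" in out:
        out["IsInternalIP"] = ipaddress.ip_address(out["SrcIP"]).is_private
    out["TenantId"] = tenant_id
    # Cost optimization: drop a column whose content has already been parsed elsewhere
    out.pop("RawData", None)
    return out


print(transform_row({"Computer": "SRV01", "PhoneNumber": "40 178 123", "SrcIP": "10.0.0.5", "RawData": "raw"}))
```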

You can read about how to implement data transformation in this blog-post

Supported ways of doing data transformation

Currently, Microsoft supports 4 methods for doing transformations:

| Collection source | How | Purpose / limitations |
|---|---|---|
| XPath | XML syntax in DCR | The XPath implementation is based on XPath 1.0, which is very limited in functionality. |
| MMA agent / Solutions (legacy): Performance, Eventlog, Syslog; API (legacy): HTTP Data Collector API | Workspace transformation DCR | Only one transformation per table |
| All sources sending data using AMA | AMA transformation DCR | If several DCRs collect the same data, the results are combined (union), so be aware of duplicate data. Governance is important |
| REST API using Log Ingestion API | Log Ingestion transformation DCR | |

Transformation using XPath

Since AMA was launched in June 2021, it has supported transformation with XPath. This method works fine and has helped my customers save money.

Microsoft asked me to provide a statement about this for the official go-live announcement when Azure Monitor Agent went GA in June 2021.

Here is an example of XPath where I collect all security events, excluding computer-related security events such as computer logon traffic from specific Exchange servers.

```
Security!*[System[(band(Keywords,13510798882111488))]] and [(EventData[Data[@Name='TargetUserName'] != 'EXCHANGE01$'])] and [(EventData[Data[@Name='TargetUserName'] != 'EXCHANGE02$'])] and [(EventData[Data[@Name='TargetUserName'] != 'EXCHANGE03$'])] and [(EventData[Data[@Name='TargetUserName'] != 'EXCHANGE04$'])] and [(EventData[Data[@Name='TargetUserName'] != 'EXCHANGE05$'])] and [(EventData[Data[@Name='SubjectUserName'] != 'domain\\EXCHANGE01$'])] and [(EventData[Data[@Name='SubjectUserName'] != 'domain\\EXCHANGE02$'])] and [(EventData[Data[@Name='SubjectUserName'] != 'domain\\EXCHANGE03$'])] and [(EventData[Data[@Name='SubjectUserName'] != 'domain\\EXCHANGE04$'])] and [(EventData[Data[@Name='SubjectUserName'] != 'domain\\EXCHANGE05$'])] and [(EventData[Data[@Name='SubjectUserName'] != 'domain.DK\\EXCHANGE01$'])] and [(EventData[Data[@Name='SubjectUserName'] != 'domain.DK\\EXCHANGE02$'])] and [(EventData[Data[@Name='SubjectUserName'] != 'domain.DK\\EXCHANGE03$'])] and [(EventData[Data[@Name='SubjectUserName'] != 'domain.DK\\EXCHANGE04$'])] and [(EventData[Data[@Name='SubjectUserName'] != 'domain.DK\\EXCHANGE05$'])]
```

AMA still supports XPath, but I recommend using data transformation with DCRs instead, as it solves some of the limitations of the XPath implementation in AMA.

XPath is a standard, currently at version 3.1 (2017), but Microsoft chose to implement a limited subset of XPath 1.0 (from 1999) in AMA. This subset doesn't support filtering with like or contains, so it is hard to scale XPath filters if you need advanced features.

In the sample above, I had to explicitly define the server-names where I wanted to remove computer-related security events.
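Because the XPath subset has no wildcard matching, every excluded account has to be spelled out. A small generator at least keeps the list maintainable. The Python sketch below reproduces the pattern of the query above from a list of server names and domain prefixes; the function name is hypothetical, it simply mirrors the structure of the example, and the generated query should be validated on a test machine before you put it in a DCR.

```python
from typing import Iterable

# Keywords mask used in the example: 0x30000000000000 (audit success | audit failure)
SECURITY_AUDIT_KEYWORDS = 13510798882111488


def build_exclusion_xpath(servers: Iterable[str], domains: Iterable[str]) -> str:
    """Generate the XPath exclusion query from lists instead of writing it by hand."""
    servers = list(servers)
    clauses = [f"Security!*[System[(band(Keywords,{SECURITY_AUDIT_KEYWORDS}))]]"]
    # One TargetUserName clause per computer account (computer accounts end with '$')
    for server in servers:
        clauses.append(f"[(EventData[Data[@Name='TargetUserName'] != '{server}$'])]")
    # One SubjectUserName clause per domain/computer combination ('\\\\' emits two backslashes)
    for domain in domains:
        for server in servers:
            clauses.append(
                f"[(EventData[Data[@Name='SubjectUserName'] != '{domain}\\\\{server}$'])]"
            )
    return " and ".join(clauses)


servers = [f"EXCHANGE0{i}" for i in range(1, 6)]
print(build_exclusion_xpath(servers, ["domain", "domain.DK"]))
```

Adding a sixth Exchange server then means adding one name to the list instead of editing three places in the query, but the query still grows with every server, which is the scaling problem described above.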

Transformation with Azure Monitor Agent (AMA) & Azure Data Collection Rules (DCR)

Transformation with Azure DCR-pipeline (Log Ingestion API) & Azure Data Collection Rule (DCR)

Transformation with Azure LogAnalytics Workspace Data Collection Rule (DCR)

Examples of transformations, based on Kusto syntax

To create a transformation, start by writing the query in LogAnalytics using Kusto. When the query works, replace the table name with source, as shown below:

| Kusto query | Purpose | Transformation syntax for the DCR `transformKql` property |
|---|---|---|
| `SecurityEvent \| where (EventID != 12345)` | Remove events with EventID 12345 from the SecurityEvent table | `source \| where (EventID != 12345)` |
| `SecurityEvent \| where (EventID != 8002) and (EventID != 5058) and (EventID != 4662)` | Remove events with EventID 4662, 5058 and 8002 from the SecurityEvent table | `source \| where (EventID != 8002) and (EventID != 5058) and (EventID != 4662)` |
| `Event \| where (EventID != 10016 or EventLog != "Application")` | Remove events with EventID 10016 if the source is the Application log (events from other logs are kept) | `source \| where (EventID != 10016 or EventLog != "Application")` |
| `CommonSecurityLog \| where (DeviceVendor !contains "sonicwall") or ((DeviceVendor contains "sonicwall") and (Activity contains "connection opened" or Activity contains "connection closed") and (Protocol != "udp/dns"))` | For SonicWall only (not Cisco or other vendors): keep only "connection opened"/"connection closed" events and remove all DNS lookups | `source \| where (DeviceVendor !contains "sonicwall") or ((DeviceVendor contains "sonicwall") and (Activity contains "connection opened" or Activity contains "connection closed") and (Protocol != "udp/dns"))` |
| `Inventory_CL \| extend TimeGenerated = now()` | Add a TimeGenerated column with the actual time (now) when the data comes in | `source \| extend TimeGenerated = now()` |
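The "replace the table name with source" step in the table above is mechanical, so it can be scripted. The Python sketch below is a hypothetical helper that turns a tested `<Table> | <operators>` query into a transformKql string. It also rejects `summarize`, the multi-row operator called out earlier in this article; that check is deliberately minimal and not a complete list of unsupported operators.

```python
import re

# Not exhaustive: summarize is the multi-row operator called out earlier in this article.
_MULTI_ROW_OPERATOR = re.compile(r"\|\s*summarize\b")


def to_transform_kql(query: str) -> str:
    """Turn a tested '<Table> | <operators>' query into a transformKql string."""
    table, pipe, operators = query.strip().partition("|")
    if not pipe or not table.strip():
        raise ValueError("expected a query of the form '<Table> | <operators>'")
    if _MULTI_ROW_OPERATOR.search(query):
        raise ValueError("summarize works on multiple records and isn't supported in transformations")
    return "source | " + operators.strip()


print(to_transform_kql("SecurityEvent | where (EventID != 12345)"))
# source | where (EventID != 12345)
```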

If you are interested in learning more, please check out this topic in my tutorial blog

Cost for transformations

While transformations themselves don’t incur direct costs, the following scenarios can result in additional charges:

- If a transformation increases the size of the incoming data, such as by adding a calculated column, you’ll be charged the standard ingestion rate for the extra data.

- If a transformation reduces the incoming data by more than 50%, you’ll be charged for the amount of filtered data above 50%.

To calculate the data processing charge resulting from transformations, use the following formula: [GB filtered out by transformations] - ([Total GB ingested] / 2).

For example, if you ingest 100 GB of data and your transformations remove 70 GB, you'll be charged for 70 GB - (100 GB / 2), which is 20 GB.

This calculation is done per data collection rule and per day.

To avoid this charge, filter incoming data at the source whenever possible. This reduces the amount of data processed by transformations and therefore minimizes any additional costs.

For example, you can modify your Active Directory audit settings if there are general events you want to filter out. Another example could be syslog events that you want to filter out; I have written a blog post about this example.

If Azure Sentinel is enabled for the Log Analytics workspace, there’s no filtering ingestion charge regardless of how much data the transformation filters.
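The charge rules in this section can be written as a small function. The Python sketch below applies the formula to one DCR for one day, including the "nothing below 50%" floor and the Sentinel exemption; it does not model the separate case where a transformation adds data (that extra data is simply billed at the normal ingestion rate). The function name is hypothetical.

```python
def transformation_charge_gb(ingested_gb: float, filtered_gb: float, sentinel_enabled: bool = False) -> float:
    """Billable 'filtered' GB for one DCR on one day, per the formula in this section."""
    if not 0 <= filtered_gb <= ingested_gb:
        raise ValueError("filtered_gb must be between 0 and ingested_gb")
    if sentinel_enabled:
        # No filtering charge when Sentinel is enabled on the workspace
        return 0.0
    # Only the filtered data above 50% of the ingested data is charged
    return max(0.0, filtered_gb - ingested_gb / 2)


print(transformation_charge_gb(100, 70))  # 20.0
```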

Data-out

The concept of Data Collection Rules also includes the ability to send the data to multiple destinations.

Currently, DCRs support the following destinations:

| Collection source | Technologies required | Supported targets |
|---|---|---|
| Performance, Eventlog, Syslog | AMA, DCR | Azure LogAnalytics standard table |
| Text logs, IIS logs, Windows Firewall logs (preview) | AMA, DCR, DCE | Azure LogAnalytics custom log table |
| SNMP traps | Linux with SNMP trap receiver, plus AMA and DCR (syslog file) or AMA and DCR (syslog stream) | Azure LogAnalytics custom log table |
| Change Tracking (legacy) | Change Tracking Extension (FIM), DCR | Azure LogAnalytics standard table |
| REST Log Ingestion API | REST endpoint, DCR, DCE | Azure LogAnalytics standard tables (CommonSecurityLog, SecurityEvent, Syslog, WindowsEvent); Azure LogAnalytics custom tables |
| Platform Metrics/Telemetry (standard Azure PaaS) | DCR (built-in, non-manageable) | Azure Monitor Metrics |
| Custom Metrics/Telemetry (custom app) | Windows (1): AMA, DCR; or Windows (2): Azure Diagnostics extension; or API: Azure Monitor REST API; or Linux: InfluxData Telegraf agent with the Azure Monitor output plugin | Azure Monitor Metrics |
| Platform logs (diagnostics per resource): AllMetrics, Resource logs (allLogs, audit) | Azure Policy (diagnostics), DCR | Azure LogAnalytics standard table |
| Activity logs (audit per subscription) | Azure Policy (diagnostics), DCR | Azure LogAnalytics standard table |

It is possible to send data to multiple tables in LogAnalytics, which is covered in more detail here
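Sending one stream to several tables is done by adding more than one entry to dataFlows, each with its own transformKql and outputStream (the same properties as in the dataFlows example earlier). The Python sketch below generates such a section; the stream, destination and table names are hypothetical, and a real DCR also needs matching streamDeclarations and destinations sections.

```python
import json
from typing import Any, Dict, List, Tuple


def build_data_flows(
    stream: str, destination: str, routes: List[Tuple[str, str]]
) -> List[Dict[str, Any]]:
    """Create one dataFlows entry per (transformKql, outputStream) route.

    Every entry reads the same input stream and sends it to the same workspace
    destination, but filters it differently and writes to a different table.
    """
    return [
        {
            "streams": [stream],
            "destinations": [destination],
            "transformKql": transform_kql,
            "outputStream": output_stream,
        }
        for transform_kql, output_stream in routes
    ]


flows = build_data_flows(
    "Custom-FirewallRaw",  # hypothetical stream declared in the DCR
    "myWorkspace",  # hypothetical name of a Log Analytics destination in the DCR
    [
        ("source | where Action == 'Deny'", "Custom-FirewallDeny_CL"),
        ("source | where Action != 'Deny'", "Custom-FirewallAllow_CL"),
    ],
)
print(json.dumps({"dataFlows": flows}, indent=2))
```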

You should expect to see more destinations that DCRs can send data to in the future.

I am really excited about the future 😄

Azure Log technologies being deprecated

The following technologies will be deprecated:

| Technology | Deprecation time | Replacement technology |
|---|---|---|
| Microsoft Monitoring Agent | Aug 2024 | Azure Monitor Agent |
| HTTP Data Collector API | N/A (I expect it to be deprecated mid-2024) | Log Ingestion API |
| Azure LogAnalytics Custom Log table (v1, MMA) | N/A (I expect it to be deprecated mid-2024) | Azure LogAnalytics Custom Log table (v2, DCR) |

Migration will be covered in a separate blog-post later. You can find the details here

Accessing data using Kusto

You can use Kusto (KQL) to query the data.

Here is a video that demonstrates several Kusto commands

Accessing data using Powershell

You can use the Az.OperationalInsights module to access the data in Azure LogAnalytics tables. Below is an example.

You need to authenticate using Connect-AzAccount (access is controlled by RBAC) before you can retrieve the data.

```powershell
# Requires the Az.Accounts and Az.OperationalInsights modules
Connect-AzAccount

$LogAnalyticsWorkspaceId = "a8c786cb-e8c1-40b8-9eb9-xxxxxxxx"

# Single-quoted here-string, so $left/$right are passed to KQL as-is
$Query = @'
InvClientComputerInfoBiosV2_CL
| summarize TimeGenerated = arg_max(TimeGenerated,*) by Computer
| join (InvClientComputerInfoSystemV2_CL
    | summarize TimeGenerated = arg_max(TimeGenerated,*) by Computer)
    on $left.Computer == $right.Computer
| join (InvClientComputerOSInfoV2_CL
    | summarize TimeGenerated = arg_max(TimeGenerated,*) by Computer)
    on $left.Computer == $right.Computer
| project Computer, UserLoggedOn, SerialNumber, Manufacturer, PCSystemType, SystemFamily, Model, Windows=Caption2, WindowsVersion=Version1, TimeGenerated
'@

Write-Output "Collecting computer information from LogAnalytics"

# Run the query; the returned rows are in the Results property
$QueryResult = Invoke-AzOperationalInsightsQuery -WorkspaceId $LogAnalyticsWorkspaceId -Query $Query
$ComputerInfoArray = $QueryResult.Results
$ComputerInfoArray
```
