Have you ever needed to collect vital configuration status (inventory) from thousands of servers on a defined recurring schedule?

Or to run a script that mitigates local issues to keep servers in a desired state?

Or to quickly run a command or script on thousands of servers, or set a registry key when you couldn’t wait for the Active Directory Group Policy auto-refresh to happen?

This blog post shows an example of how to do this with Azure extensions, focusing on the Azure Custom Script Extension combined with the power of Azure Arc and Azure Compute.

Background

Previously, I used different methods (ConfigMgr, remote PowerShell sessions, etc.) depending on what my customers preferred. Today, however, I see many customers moving to cloud-native tooling, for example migrating workstations from SCCM to Intune, and using cloud-native services for servers through Azure Arc and Azure Compute.

The main problem I experience with the “old methods” is performance: collecting inventory data from thousands of machines over remote PowerShell sessions takes a significant amount of time.

I wanted a parallel method that could get the task done within 2-4 minutes, so I decided to use Azure extensions together with Azure Arc and Azure Compute.

Azure extensions are part of the Microsoft Azure infrastructure services. VM extensions are software components that extend VM functionality and simplify various VM management operations.

This post is about using the Azure Custom Script Extension and running the deployments as jobs. I use this method to run a script on thousands of servers and have it complete within a few minutes, because each deployment is submitted as a PowerShell background job (-AsJob), so the extension deployments run in parallel instead of one server at a time.

Before the deep dive into the Azure Custom Script Extension, I will briefly introduce another extension: Azure Desired State Configuration (DSC).

Azure Desired State Configuration (DSC)

The primary use case for the Azure Desired State Configuration (DSC) extension is to bootstrap a VM to the Azure Automation State Configuration (DSC) service. The service provides benefits that include ongoing management of the VM configuration and integration with other operational tools, such as Azure Monitor. Using the extension to register VMs with the service provides a flexible solution that even works across Azure subscriptions.

You can use the DSC extension independently of the Automation DSC service. However, this will only push a configuration to the VM. No ongoing reporting is available, other than locally in the VM.

See the Azure DSC extension documentation for more information.

Before you enable the DSC extension, you should know that a newer version of DSC is now generally available, managed by a feature of Azure Automanage named machine configuration. The machine configuration feature combines features of the Desired State Configuration (DSC) extension handler, Azure Automation State Configuration, and the most commonly requested features from customer feedback. Machine configuration also includes hybrid machine support through Arc-enabled servers.

Azure Custom Scripts

The Azure Custom Script Extension downloads and runs scripts on Azure virtual machines (VMs). This extension is useful for post-deployment configuration, software installation, or any other configuration or management task. You can download scripts from Azure Storage or GitHub, or provide them to the Azure portal at extension runtime.

The Custom Script Extension integrates with Azure Resource Manager templates. You can also run it by using the Azure CLI, PowerShell, the Azure portal, or the Azure Virtual Machines REST API.

As mentioned, the original intent of the Custom Script Extension was to use it during provisioning to finalize the configuration of a server. So I know I’m stretching it a bit with my use cases, but hey, it works and it is fast 🙂

I use Custom Scripts for two primary purposes:

- Collection of vital configuration / inventory from servers
    - I have an inventory script that collects information from servers and uploads the data into Azure Log Analytics custom logs.
    - On top of this, I have created 35+ dashboards with related information (Defender A/V, BitLocker, Windows Update status, etc.).
    - Inventory collection runs on both domain-joined and workgroup servers.
    - The script runs under the LOCAL SYSTEM account.

- Desired state mitigation / self-healing / soft repairs
    - I have a script that checks for local issues and mitigates them when servers are ‘drifting’ away from a desired state. For example, it can:
        - force a soft repair of Defender A/V with a signature reset + signature update, in case a server is not updating or the last update was more than 2 days ago
        - force a Windows Update soft repair, in case Windows Update gets corrupted
        - force a soft repair by resetting the local Group Policy cache, in case Group Policy cannot update
        - send an email about WMI issues, in case WMI gets corrupted

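The upload of inventory data into Log Analytics custom logs is not shown in this post. As a minimal sketch, assuming the inventory script posts JSON records through the Azure Monitor HTTP Data Collector API (a legacy API that Microsoft is retiring in favour of the Logs Ingestion API), the request signing and the POST could look like this. `New-LogAnalyticsSignature`, `Send-InventoryToLogAnalytics` and the log and property names in the usage comment are hypothetical, not part of the author's script.

```powershell
# Build the SharedKey authorization value for the HTTP Data Collector API
function New-LogAnalyticsSignature {
    param(
        [Parameter(Mandatory)] [string] $WorkspaceId,
        [Parameter(Mandatory)] [string] $SharedKey,     # workspace key (base64)
        [Parameter(Mandatory)] [string] $Date,          # RFC 1123 date, also sent as x-ms-date
        [Parameter(Mandatory)] [int]    $ContentLength  # length of the UTF-8 body in bytes
    )

    # String-to-sign: method, body length, content type, x-ms-date header, resource
    $stringToSign = "POST`n$ContentLength`napplication/json`nx-ms-date:$Date`n/api/logs"
    $hmac = [System.Security.Cryptography.HMACSHA256]::new([Convert]::FromBase64String($SharedKey))
    try {
        $hash = $hmac.ComputeHash([Text.Encoding]::UTF8.GetBytes($stringToSign))
    }
    finally {
        $hmac.Dispose()
    }
    "SharedKey ${WorkspaceId}:$([Convert]::ToBase64String($hash))"
}

# Post inventory records to a custom log (Log Analytics appends _CL to the log type)
function Send-InventoryToLogAnalytics {
    param(
        [Parameter(Mandatory)] [string]   $WorkspaceId,
        [Parameter(Mandatory)] [string]   $SharedKey,
        [Parameter(Mandatory)] [string]   $LogType,
        [Parameter(Mandatory)] [object[]] $Records
    )

    $body = [Text.Encoding]::UTF8.GetBytes((ConvertTo-Json -InputObject $Records -Depth 5))
    $date = [DateTime]::UtcNow.ToString("r")
    $headers = @{
        "Authorization" = (New-LogAnalyticsSignature -WorkspaceId $WorkspaceId -SharedKey $SharedKey -Date $date -ContentLength $body.Length)
        "Log-Type"      = $LogType
        "x-ms-date"     = $date
    }
    $uri = "https://$WorkspaceId.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    Invoke-RestMethod -Uri $uri -Method Post -ContentType "application/json" -Headers $headers -Body $body
}

# Usage (hypothetical log type and properties):
# $record = [pscustomobject]@{ Computer = $env:COMPUTERNAME; BitLockerStatus = "On" }
# Send-InventoryToLogAnalytics -WorkspaceId $wsId -SharedKey $wsKey -LogType "ServerInventory" -Records @($record)
```

Signing the exact body length and date means a replayed or modified request fails authentication, which is why the signature must be computed per request.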
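The Defender soft-repair trigger above (“the last update was more than 2 days ago”) can be sketched as a small test function plus a repair step. This is an illustration, assuming the Defender PowerShell module (`Get-MpComputerStatus`, `Update-MpSignature`) and `MpCmdRun.exe` in its default location; `Test-DefenderSignatureStale` and `Invoke-DefenderSoftRepair` are hypothetical names, and `-RemoveDefinitions -DynamicSignatures` is one possible “reset” (`-All` is the more aggressive option).

```powershell
# Returns $true when the signatures are older than the allowed age
function Test-DefenderSignatureStale {
    param(
        [Parameter(Mandatory)] [datetime] $LastUpdated,
        [int] $MaxAgeDays = 2,
        [datetime] $Now = (Get-Date)
    )
    ($Now - $LastUpdated).TotalDays -gt $MaxAgeDays
}

# Soft repair: reset dynamic signatures, then request fresh definitions
# (Windows only; runs as LocalSystem under the Custom Script Extension)
function Invoke-DefenderSoftRepair {
    $status = Get-MpComputerStatus
    if (-not (Test-DefenderSignatureStale -LastUpdated $status.AntivirusSignatureLastUpdated)) {
        Write-Output "Defender signatures are current ($($status.AntivirusSignatureLastUpdated)), nothing to do"
        return
    }
    $mpCmdRun = Join-Path $env:ProgramFiles "Windows Defender\MpCmdRun.exe"
    & $mpCmdRun -RemoveDefinitions -DynamicSignatures
    Update-MpSignature
    Write-Output "Defender signatures reset and update requested"
}
```

Keeping the staleness check separate from the repair makes the decision easy to test and log, and the repair only runs when the server has actually drifted.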

Operating system

The Custom Script Extension for Windows will run on these supported operating systems:

- Windows Server 2008 R2

- Windows Server 2012

- Windows Server 2012 R2

- Windows Server 2016

- Windows Server 2016 Core

- Windows Server 2019

- Windows Server 2019 Core

- Windows Server 2022

- Windows Server 2022 Core

- Windows 10

- Windows 11

Implementation

Instead of setting up local scheduled tasks, I decided on this design:

SourcePath for the scripts is a file path (UNC) in the automation environment, where I keep the scripts that will run on the local servers.

The deployment source is an Azure storage account. Normally, I configure the storage account to be accessible only from the internal network (no public endpoint; a private endpoint connection is enabled).

Targets are both native Azure VMs and on-premises Azure Arc-enabled servers.
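The deployment loops later in this post pass `$global:CustomScript_FileUri` and `$ProtectedSettings` to the extension without showing how they are built. A minimal sketch, assuming the scripts sit in a blob container in the storage account and the first script listed is the entry point: the blob URIs go in the public settings, while the storage account key and the command line go in the protected settings, which are encrypted and not returned with the public settings. `New-CustomScriptDeployment` and the vault, secret, account and container names in the usage comment are hypothetical.

```powershell
function New-CustomScriptDeployment {
    param(
        [Parameter(Mandatory)] [string]   $StorageAccountName,
        [Parameter(Mandatory)] [string]   $StorageAccountKey,
        [Parameter(Mandatory)] [string]   $ContainerName,
        # The first script in the list is the one the extension executes
        [Parameter(Mandatory)] [string[]] $ScriptNames
    )

    # Public settings: where the extension downloads the script(s) from
    $fileUris = foreach ($name in $ScriptNames) {
        "https://$StorageAccountName.blob.core.windows.net/$ContainerName/$name"
    }

    # Protected settings: encrypted, so the key never appears in the public settings
    $protectedSettings = @{
        storageAccountName = $StorageAccountName
        storageAccountKey  = $StorageAccountKey
        commandToExecute   = "powershell.exe -ExecutionPolicy Unrestricted -File $($ScriptNames[0])"
    }

    [pscustomobject]@{
        FileUris          = @($fileUris)
        ProtectedSettings = $protectedSettings
    }
}

# Usage (hypothetical names; key retrieved from Azure Key Vault instead of hard-coded):
# $key = Get-AzKeyVaultSecret -VaultName "kv-automation" -Name "scripts-storage-key" -AsPlainText
# $deployment = New-CustomScriptDeployment -StorageAccountName "stautomation" `
#     -StorageAccountKey $key -ContainerName "customscripts" -ScriptNames "Inventory.ps1"
# $global:CustomScript_FileUri = $deployment.FileUris
# $ProtectedSettings = $deployment.ProtectedSettings
```

The command uses a relative script name because the extension runs it from its download folder.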

I have chosen to do the implementation in 6 steps, using the script provided on my GitHub:

- Variables
    - Scoping, with support for advanced filtering such as excluding subscriptions, resource groups, resources whose names start with a given prefix, or specific resources
    - Deployment information: storage account, storage account key and script(s) to run. For demonstration purposes, the script lets you put the storage account access key directly in the script, but remember to store it in and retrieve it from Azure Key Vault before going into production.
- Building the scope of servers, with support for any exclusions defined in the variables
    - I use Azure Resource Graph (ARG) to get the list of hybrid servers and native VMs, with exclusion support
- Upload of script(s) to the Azure storage account from the SourcePath (UNC path)

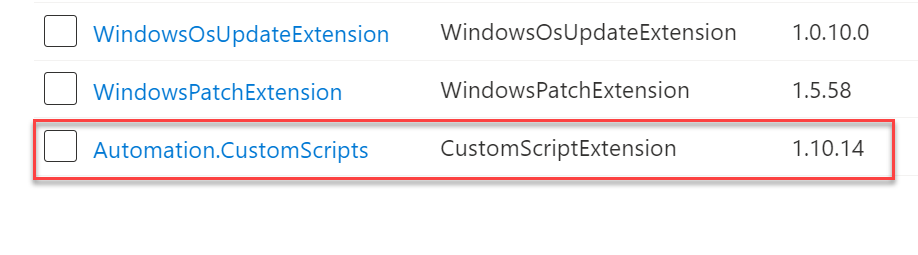

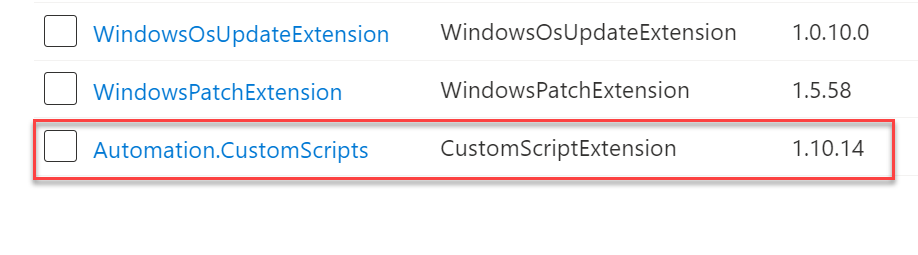

- Extension status: build an overview, including a list of any extensions in a failed state
    - I use Azure Resource Graph (ARG) to get the extension status
- Deployment of Azure Custom Script(s) as a job to Azure Arc hybrid servers; the script runs with -AsJob
- Deployment of Azure Custom Script(s) as a job to native Azure VMs; the script runs with -AsJob
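The scoping and exclusion steps above boil down to filtering the server list returned by Resource Graph. A minimal sketch, assuming each record has the `subscriptionId`, `resourceGroup` and `name` properties that Resource Graph returns; `Select-ServersInScope` and its exclusion parameters are hypothetical, and the query in the usage comment is one plausible way to fetch Arc-enabled servers (adjust it to your Az.ResourceGraph version).

```powershell
function Select-ServersInScope {
    param(
        [Parameter(Mandatory)] [object[]] $Servers,
        [string[]] $ExcludeSubscriptions  = @(),
        [string[]] $ExcludeResourceGroups = @(),
        [string[]] $ExcludeNamePrefixes   = @(),
        [string[]] $ExcludeNames          = @()
    )

    $Servers | Where-Object {
        $server = $_
        # -like and -notin are case-insensitive, like Azure resource names
        $prefixHit = @($ExcludeNamePrefixes | Where-Object { $server.name -like "$_*" }).Count -gt 0
        ($server.subscriptionId -notin $ExcludeSubscriptions) -and
        ($server.resourceGroup -notin $ExcludeResourceGroups) -and
        ($server.name -notin $ExcludeNames) -and
        (-not $prefixHit)
    }
}

# Usage (hypothetical exclusions; Search-AzGraph returns at most 1,000 rows per call,
# so page with -SkipToken for larger estates):
# $query = "resources | where type =~ 'microsoft.hybridcompute/machines' | project name, resourceGroup, subscriptionId, location, type, osType = tostring(properties.osType), osSku = tostring(properties.osSku)"
# $arc = Search-AzGraph -Query $query -First 1000
# $Global:HybridVMsInScope_All = Select-ServersInScope -Servers $arc -ExcludeResourceGroups "rg-lab" -ExcludeNamePrefixes "tst-"
```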

The script is downloaded to C:\Packages\Plugins\Microsoft.Compute.CustomScriptExtension\<version>\Downloads\<n>

Logs can be found locally in C:\WindowsAzure\Logs\Plugins\Microsoft.Compute.CustomScriptExtension

The script output is also collected and shown in the extension status in Azure.

Main program | Deployment of Azure Custom Script to Azure Arc hybrid servers as job

```powershell
# Only Windows servers, excluding Windows Server 2008 R2, Windows 10 and servers with known extension issues
$Scope_HybridAz = $Global:HybridVMsInScope_All | Where-Object {
    ($_.osType -eq "Windows") -and
    ($_.osSku -notlike "*2008 R2*") -and
    ($_.osSku -notlike "*Windows 10*") -and
    ($_.name -notin $Issues_CustomScripts_List)
}

ForEach ($Server in $Scope_HybridAz)
{
    $ReRunTime = (Get-Date -Format "yyyy-MM-dd_HH:mm:ss")

    If ($Server.type -eq "microsoft.hybridcompute/machines")
    {
        # Switch subscription context only when the server lives in another subscription
        $Context = Get-AzContext
        If ($Context.Subscription.Id -ne $Server.subscriptionId)
        {
            $SetContext = Set-AzContext -Subscription $Server.subscriptionId
        }

        Write-Output ""
        Write-Output "Hybrid AzArc: Starting script $($global:CustomScript_RunScriptName) on server as job -> $($Server.name)"

        # Adding lastrun to settings, causing job to re-run as it is changed !
        $Settings = @{"fileUris" = $global:CustomScript_FileUri; "lastrun" = $ReRunTime}

        $result = Set-AzConnectedMachineExtension -ResourceGroupName $Server.resourceGroup `
            -Location $Server.location `
            -MachineName $Server.name `
            -Name $global:CustomScript_RunScriptName `
            -Publisher "Microsoft.Compute" `
            -ExtensionType "CustomScriptExtension" `
            -Settings $Settings `
            -ProtectedSettings $ProtectedSettings `
            -ForceRerun $ReRunTime `
            -AsJob
    }
}
```

Main program | Deployment of Azure Custom Script to native Azure VMs as job

```powershell
# Only Windows VMs, excluding Windows Server 2008 R2, Windows 10 and VMs with known extension issues
$Scope_NativeAz = $Global:NativeVMsInScope_All | Where-Object {
    ($_.osType -eq "Windows") -and
    ($_.osName -notlike "*2008 R2*") -and
    ($_.osName -notlike "*Windows 10*") -and
    ($_.name -notin $Issues_CustomScripts_List)
}

ForEach ($Server in $Scope_NativeAz)
{
    $ReRunTime = (Get-Date -Format "yyyy-MM-dd_HH:mm:ss")

    If ($Server.type -eq "microsoft.compute/virtualmachines")
    {
        # Switch subscription context only when the VM lives in another subscription
        $Context = Get-AzContext
        If ($Context.Subscription.Id -ne $Server.subscriptionId)
        {
            $SetContext = Set-AzContext -Subscription $Server.subscriptionId
        }

        # Adding lastrun to settings, causing job to re-run as it is changed !
        $Settings = @{"fileUris" = $global:CustomScript_FileUri; "lastrun" = $ReRunTime}

        Write-Output ""
        Write-Output "Native Az: Starting script $($global:CustomScript_RunScriptName) on VM as job -> $($Server.name)"

        $Result = Set-AzVMExtension -ResourceGroupName $Server.resourceGroup `
            -Location $Server.location `
            -VMName $Server.name `
            -Name $global:CustomScript_RunScriptName `
            -Publisher "Microsoft.Compute" `
            -ExtensionType "CustomScriptExtension" `
            -TypeHandlerVersion "1.10" `
            -Settings $Settings `
            -ProtectedSettings $ProtectedSettings `
            -ForceRerun $ReRunTime `
            -AsJob
    }
}
```
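Because every `Set-Az*Extension` call in the loops above runs with `-AsJob`, the loops return quickly while the deployments continue as PowerShell background jobs in the automation session. The post does not show how results are collected; a minimal sketch, assuming you keep each job returned by those calls in a list, could wait for them and summarise their state. `Wait-DeploymentJobs` and the `$Jobs` list are hypothetical.

```powershell
function Wait-DeploymentJobs {
    param(
        [Parameter(Mandatory)] [System.Management.Automation.Job[]] $Jobs,
        [int] $TimeoutSeconds = 600
    )

    # Wait for all jobs, or until the timeout; jobs still running keep State 'Running'
    $null = Wait-Job -Job $Jobs -Timeout $TimeoutSeconds

    foreach ($job in $Jobs) {
        # Collect errors from the job without printing them or stopping the loop
        try {
            $null = Receive-Job -Job $job -Keep -ErrorAction SilentlyContinue -ErrorVariable jobErrors
        }
        catch {
            $jobErrors = @($_)
        }
        [pscustomobject]@{
            Name   = $job.Name
            State  = $job.State.ToString()
            Errors = @($jobErrors | ForEach-Object { $_.ToString() })
        }
    }
}

# Usage (hypothetical list; add each returned job inside the loops):
# $Jobs = [System.Collections.Generic.List[object]]::new()   # before the loop
# $Jobs.Add($result)                                          # after Set-Az*Extension ... -AsJob
# Wait-DeploymentJobs -Jobs $Jobs -TimeoutSeconds 900 | Group-Object State | Select-Object Name, Count
```

Grouping by state gives a quick overview of how many servers completed, failed or are still running after the timeout.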

Tips and tricks

- The highest failure rate for this extension is due to syntax errors in the script. Test that the script runs without errors. Put additional logging into the script to make it easier to find failures.

- Write scripts that are idempotent, so running them more than once accidentally won’t cause system changes.

- Ensure that the scripts don’t require user input when they run.

- The script is allowed 90 minutes to run. Anything longer will result in a failed provision of the extension.

- Don’t put reboots inside the script. This action will cause problems with other extensions that are being installed, and the extension won’t continue after the reboot.

- If you have a script that will cause a reboot before installing applications and running scripts, schedule the reboot by using a Windows Scheduled Task or by using tools such as DSC, Chef, or Puppet extensions.

- Don’t use the Custom Script Extension to run Update-AzVM with the same VM as its parameter, because it will wait for itself.

- Don’t run a script that will cause a stop or update of the VM agent. It might leave the extension in a transitioning state and lead to a timeout.

- When the script is running, you’ll only see a “transitioning” extension status from the Azure portal or CLI. If you want more frequent status updates for a running script, you’ll need to create your own solution.

- The Custom Script Extension doesn’t natively support proxy servers. However, you can use a file transfer tool that supports proxy servers within your script, such as Invoke-WebRequest.

- Be aware of non-default directory locations that your scripts or commands might rely on. Have logic to handle this situation.

- The Custom Script Extension runs under the LocalSystem account.
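Several of these tips (idempotent scripts, no user input, no reboots, extra logging) can be folded into a small test-then-set wrapper, similar in spirit to DSC’s Test/Set model. A minimal sketch; `Invoke-DesiredState` and the registry key in the usage comment are hypothetical.

```powershell
function Invoke-DesiredState {
    param(
        [Parameter(Mandatory)] [string] $Name,
        # Returns $true when the machine is already in the desired state
        [Parameter(Mandatory)] [scriptblock] $Test,
        # Brings the machine into the desired state; must not prompt or reboot
        [Parameter(Mandatory)] [scriptblock] $Set
    )

    if (& $Test) {
        Write-Output "[$Name] already compliant, nothing to do"
        return
    }

    Write-Output "[$Name] not compliant, applying fix"
    $null = & $Set

    # Re-test so the log shows whether the fix actually worked
    if (& $Test) {
        Write-Output "[$Name] fixed"
    }
    else {
        Write-Error "[$Name] still not compliant after fix"
    }
}

# Usage (hypothetical registry key):
# Invoke-DesiredState -Name "Contoso setting" `
#     -Test { (Get-ItemProperty -Path "HKLM:\SOFTWARE\Contoso" -Name "Setting" -ErrorAction SilentlyContinue).Setting -eq 1 } `
#     -Set  { New-Item -Path "HKLM:\SOFTWARE\Contoso" -Force | Out-Null
#             Set-ItemProperty -Path "HKLM:\SOFTWARE\Contoso" -Name "Setting" -Value 1 }
```

Running it twice applies the fix only once, so an accidental re-run of the extension does not change the system again.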

Trick to make the script re-run

The trick to force a script to re-run is to change the extension settings. Below, I add a lastrun setting containing the current date/time. Because the settings have changed (on purpose), the Custom Script Extension runs the script again 🙂

```powershell
# Adding lastrun to settings, causing job to re-run as it is changed !
$ReRunTime = (Get-Date -Format "yyyy-MM-dd_HH:mm:ss")
$Settings = @{"fileUris" = $global:CustomScript_FileUri; "lastrun" = $ReRunTime}
```
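The timestamp has one-second resolution, so two deployments to the same machine started within the same second would carry identical settings. If you would rather not depend on the clock, a variant is to add a per-call GUID. This is a sketch, assuming (as the lastrun trick itself does) that the extension ignores extra keys in the public settings; `New-RerunSettings` and the `runId` key are hypothetical.

```powershell
function New-RerunSettings {
    param(
        [Parameter(Mandatory)] [string[]] $FileUri
    )

    # A GUID is unique per call, unlike a timestamp with one-second resolution
    $runId = [guid]::NewGuid().ToString()

    [pscustomobject]@{
        Settings   = @{
            fileUris = $FileUri
            lastrun  = (Get-Date -Format "yyyy-MM-dd_HH:mm:ss")
            runId    = $runId
        }
        # Pass this to -ForceRerun so both values change together
        ForceRerun = $runId
    }
}

# Usage:
# $rerun = New-RerunSettings -FileUri $global:CustomScript_FileUri
# Set-AzVMExtension ... -Settings $rerun.Settings -ForceRerun $rerun.ForceRerun -AsJob
```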

Limitations

Currently, Microsoft supports only one extension of type CustomScriptExtension per machine, but that extension can run multiple scripts.

Remember to remove any Custom Script extensions used for the initial provisioning of the machines. Otherwise, your deployment will fail.
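Because only one Custom Script Extension is supported per machine, it can help to look for a leftover one before deploying. A minimal sketch, assuming extension records (for example from Resource Graph) flattened into `machineName`, `name` and `extensionType` properties; `Find-ConflictingCustomScript` is hypothetical.

```powershell
function Find-ConflictingCustomScript {
    param(
        [Parameter(Mandatory)] [object[]] $Extensions,
        # The extension name your own deployment uses, e.g. $global:CustomScript_RunScriptName
        [Parameter(Mandatory)] [string] $DeploymentName
    )

    # Any other CustomScriptExtension on a machine would block our deployment there
    $Extensions | Where-Object {
        ($_.extensionType -eq "CustomScriptExtension") -and ($_.name -ne $DeploymentName)
    }
}
```

Machines returned by this check can be removed from scope, or cleaned up first, before the deployment loops run.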

Troubleshooting

How do I remove failed Custom Script extensions?

If your custom script fails on a server, the extension should automatically go into a failed state. You can then remove the extension by using Remove-AzConnectedMachineExtension (Azure Arc) or Remove-AzVMExtension (native VM).

You can see an example of this in the last section of my Remove-Automation.CustomScripts.ps1 script on GitHub.
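A minimal sketch of that removal, assuming extension records with `type`, `name`, `machineName`, `resourceGroup`, `subscriptionId` and `provisioningState` (the Resource Graph query in the usage comment is one plausible way to get them). `Remove-FailedExtension` is hypothetical and not the author’s GitHub script; it supports `-WhatIf`, so you can review the plan before anything is removed.

```powershell
function Remove-FailedExtension {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)] [object[]] $Extensions
    )

    foreach ($ext in @($Extensions | Where-Object { $_.provisioningState -eq "Failed" })) {
        $isArc = $ext.type -eq "microsoft.hybridcompute/machines/extensions"
        $platform = "AzureVM"
        if ($isArc) { $platform = "AzureArc" }

        # Emit the plan entry first, so -WhatIf still shows what would be removed
        [pscustomobject]@{ Machine = $ext.machineName; Extension = $ext.name; Platform = $platform }

        if ($PSCmdlet.ShouldProcess("$($ext.machineName)/$($ext.name)", "Remove failed extension")) {
            Set-AzContext -Subscription $ext.subscriptionId | Out-Null
            if ($isArc) {
                Remove-AzConnectedMachineExtension -ResourceGroupName $ext.resourceGroup -MachineName $ext.machineName -Name $ext.name
            }
            else {
                Remove-AzVMExtension -ResourceGroupName $ext.resourceGroup -VMName $ext.machineName -Name $ext.name -Force
            }
        }
    }
}

# Usage (query is an assumption; adjust to your environment):
# $query = "resources | where type in~ ('microsoft.compute/virtualmachines/extensions', 'microsoft.hybridcompute/machines/extensions') | extend provisioningState = tostring(properties.provisioningState), machineName = tostring(split(id, '/')[8]) | where provisioningState =~ 'Failed' | project type, name, machineName, resourceGroup, subscriptionId, provisioningState"
# Remove-FailedExtension -Extensions (Search-AzGraph -Query $query -First 1000) -WhatIf
```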

PowerShell script causing the Custom Script Extension to go into wait mode

In some cases, I have also seen the PowerShell script fail because of a misconfiguration in the script. When this happens, Azure stays in a “waiting” mode, because the extension waits for the PowerShell process to terminate.

You can easily reproduce this failure by running the script manually on the server, where it will also hang.

I have provided a script on my GitHub that handles this situation. Currently, the script terminates ANY running PowerShell session. If you want to fine-tune the script, look for PowerShell sessions with open handles in C:\packages\plugins.

The script also cleans up the local custom script and restarts the Azure Arc agent. Within a few seconds, Azure picks up the change, the backend is cleaned up and the extension is removed.
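Instead of terminating every PowerShell session, a narrower alternative (matching the process command line rather than open handles, so not the approach in the GitHub script) is to stop only PowerShell processes whose command line references the script the extension launched. This sketch assumes you know the script file name; `Select-HungScriptProcess` and `Inventory.ps1` are hypothetical.

```powershell
function Select-HungScriptProcess {
    param(
        # Objects with Name, ProcessId and CommandLine, e.g. from Win32_Process
        [Parameter(Mandatory)] [object[]] $Processes,
        [Parameter(Mandatory)] [string]   $ScriptName
    )

    $Processes | Where-Object {
        ($_.Name -in @("powershell.exe", "pwsh.exe")) -and
        ($_.CommandLine -like "*$ScriptName*")
    }
}

# Usage on the affected server (hypothetical script name):
# $candidates = Get-CimInstance -ClassName Win32_Process -Filter "Name = 'powershell.exe'"
# Select-HungScriptProcess -Processes $candidates -ScriptName "Inventory.ps1" |
#     ForEach-Object { Stop-Process -Id $_.ProcessId -Force }
```

Interactive admin sessions on the same server are left alone, because their command lines do not reference the extension’s script.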
