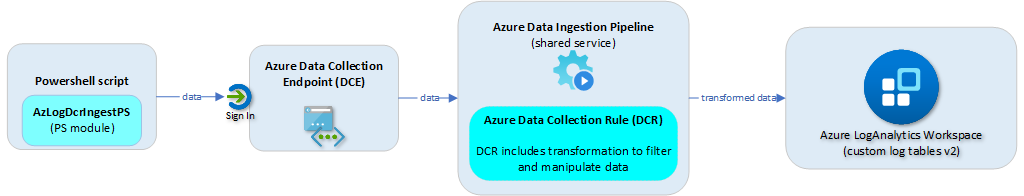

If you send data with the HTTP Data Collector API (REST) today, keep reading: this API will be deprecated as part of the transition to the Log Ingestion API, which uses Azure Data Collection Rules, Azure Pipeline and Azure LogAnalytics custom tables (v2).

As you can see from the illustrations above, more components (DCR, DCE, Pipeline, Schema) are added, which also increases the complexity and brings challenges to take into account:

- Creation of DCR + tables before sending data

- Dependencies to DCE, which must exist

- Timing / delays when doing automations

- Schema for data must be defined in both DCR and custom table (v2)

- Naming conventions & limitations / Prohibited names

- Deal with new properties – support both merge and overwrite

- Upload size changes (32 MB -> 1 MB) per JSON (batches, calculations)

- Data manipulations of source data (filtering, remove)

Your new helper – AzLogDcrIngestPS 🙂

I have built a PowerShell module, AzLogDcrIngestPS, which eases the steps if you want to send any data to Azure LogAnalytics custom logs (v2) – using the new features of Azure Log Ingestion Pipeline, Azure Data Collection Rules & Log Ingestion API.

Think of this module as an “AnyConnector”, which can be used to send data from any 3rd party source using a PowerShell script as an intermediate, or as part of a script collecting data from an endpoint. It can be used in PowerShell scripts, Azure Functions, Azure Automation, etc.

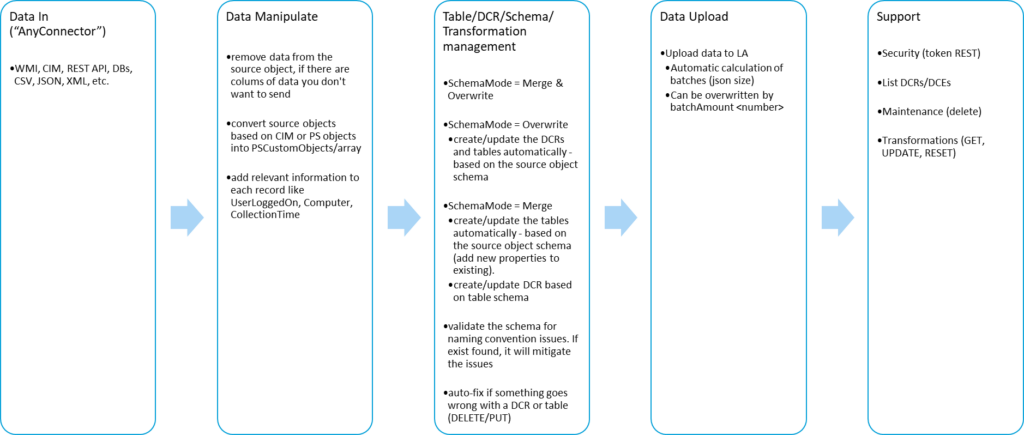

The 25 functions in AzLogDcrIngestPS will help you with:

- data manipulation before sending data in

- table / dcr / schema / transformation management

- data upload using Azure Log Ingestion Pipeline / Log Ingestion API

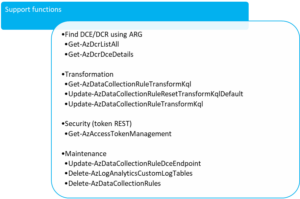

- support/security

Cool features of AzLogDcrIngestPS are:

- create/update the DCRs and tables automatically – based on the source object schema

- validate the schema for naming convention issues. If issues are found, it will mitigate them

- update schema of DCRs and tables, if the structure of the source object changes

- auto-fix if something goes wrong with a DCR or table

- can remove data from the source object, if there are columns of data you don’t want to send

- can convert source objects based on CIM or PS objects into PSCustomObjects/array

- can add relevant information to each record like UserLoggedOn, Computer, CollectionTime

See AzLogDcrIngestPS functions in action 🙂

I have provided 4 demos for you to try. I have also provided videos for you to check out.

Alternatively, I have built a cool showcase – ClientInspector (v2), free for the community, where you can see how to use the functions from the AzLogDcrIngestPS module.

ClientInspector can bring back data from your clients using Azure Log Ingestion Pipeline, Azure Data Collection Rules and Azure LogAnalytics; view them with Azure Monitor & Azure Dashboards – and get “drift-alerts” using Microsoft Sentinel. It includes tons of great information and dashboards to see if you are in control of your clients – or if something is drifting from the desired state.

Videos

Video 3m 19s – Running ClientInspector using commandline (normal mode)

Video 1m 40s – Automatic creation of 2 tables & DCRs (verbose mode)

Video 1m 37s – Automatic creation of 2 tables & DCRs (normal mode)

Video 1m 34s – See schema of DCR and table

Video 2m 19s – Data manipulation

Video 1m 58s – Kusto queries against data

Video 3m 01s – Dashboards

Video 0m 48s – Sample usage of data – lookup against Lenovo warranty db

Video 7m 25s – Deployment via ClientInspector DeploymentKit

Download latest version

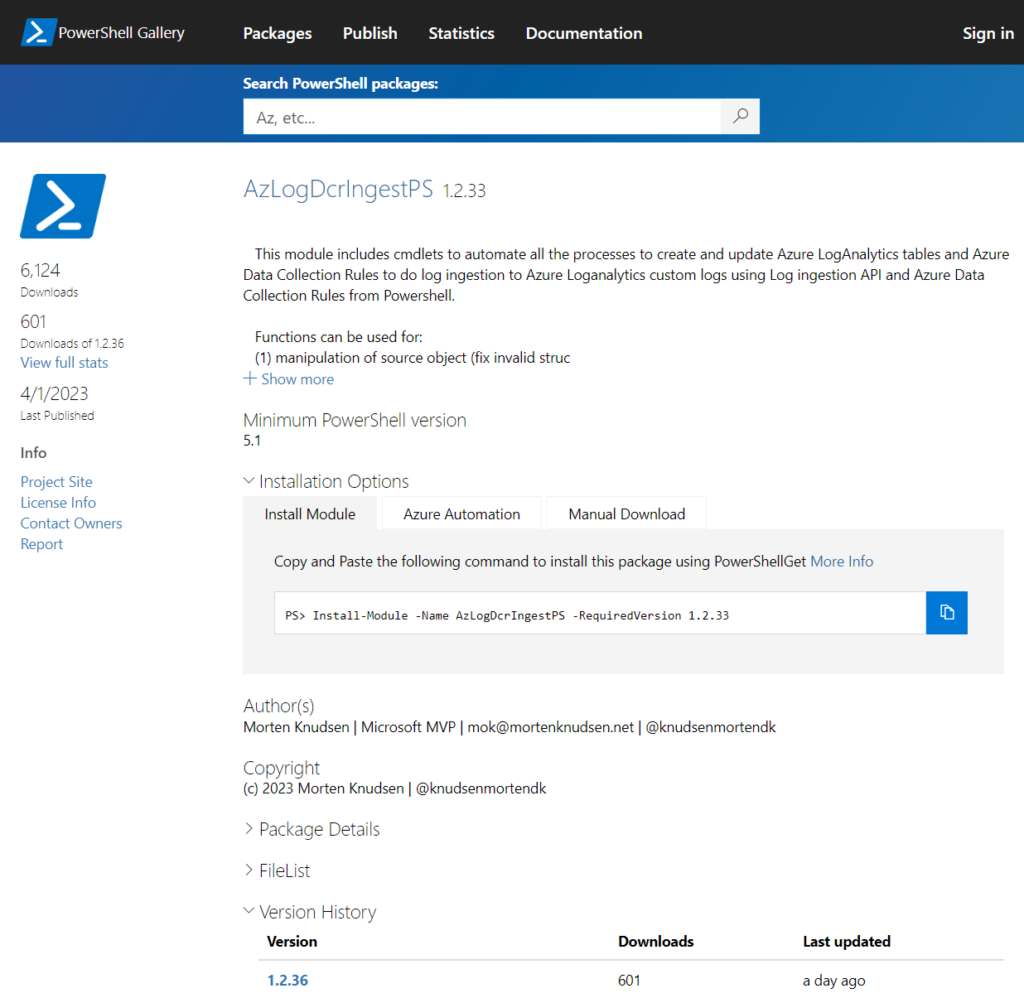

You can download the latest version of AzLogDcrIngestPS here – or install it from the PowerShell Gallery:

Install AzLogDcrIngestPS from the PowerShell Gallery

Install-Module AzLogDcrIngestPS

Download the AzLogDcrIngestPS module from this GitHub repository

Quick links for more information

How to get started ?

Background for building this Powershell module

Deep-dive about Azure Data Collection Rules (DCRs)

Deep-dive about Log Ingestion API

Architecture, Schema & Networking

Source data – what data can I use ?

Example of how to use the functions

How can I modify the schema of LogAnalytics table & Data Collection Rule, when the source object schema changes ?

How to enable verbose-mode & get more help?

Integration of AzLogDcrIngest in your scripts.

Function synopsis

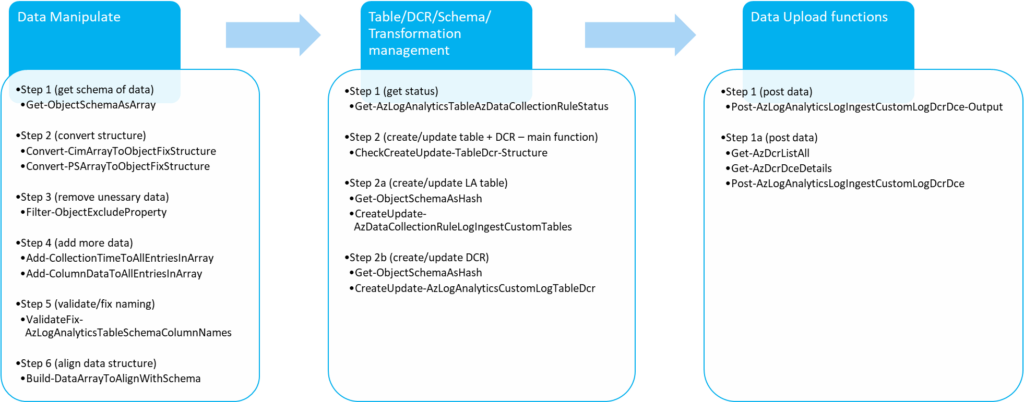

Detailed – Data Manipulation

Detailed – Table/DCR/Schema management

Detailed – Data Out (upload to Azure LogAnalytics)

Detailed – Support functions

Tips & Tricks – More help

Big thanks – you are rock stars 🙂

Lastly, I would like to give big credit to a few people I have worked with while building the AzLogDcrIngestPS PowerShell module and in my daily work with the Azure log & viewing capabilities:

| Name | Role |
|---|---|
| Ivan Varnitski | Program Manager – Azure Pipeline |
| Evgeny Ternovsky | Program Manager – Azure Pipeline |
| Nick Kiest | Program Manager – Azure Data Collection Rules |
| Oren Salzberg | Program Manager – Azure LogAnalytics |
| Guy Wild | Technical Writer – Azure LogAnalytics |
| John Gardner | Program Manager – Azure Workbooks |
| Shikha Jain | Program Manager – Azure Workbooks |
| Ingo Bringemeier | Principal Program Manager – Azure Monitor |
| Shayoni Seth | Program Manager – Azure Monitor Agent |
| Jeff Wolford | Program Manager – Azure Monitor Agent |
| Xema Pathak | Program Manager – Azure VMInsight (integration to Azure Monitor Agent) |

How to get started?

The 3 steps to get started with sending logs through Azure Pipeline using Log Ingestion API, Data Collection Rules and AzLogDcrIngestPS are:

Step 1 – Get demo environment up and running.

Download the Powershell script Step1-Deployment-DemoEnvironment

Modify the SubscriptionId and TenantId in the header before running the deployment

The deployment script will perform the following tasks:

- create Azure Resource Group for Azure LogAnalytics Workspace

- create Azure LogAnalytics Workspace

- create Azure App registration used for upload of data by demo-upload script

- create Azure service principal on Azure App

- create needed secret on Azure app

- create the Azure Resource Group for Azure Data Collection Endpoint (DCE) in same region as Azure LogAnalytics Workspace

- create the Azure Resource Group for Azure Data Collection Rules (DCR) in same region as Azure LogAnalytics Workspace

- create Azure Data Collection Endpoint (DCE) in same region as Azure LogAnalytics Workspace

- delegate permissions for Azure App on LogAnalytics workspace

- delegate permissions for Azure App on Azure Resource Group for Azure Data Collection Rules (DCR)

- delegate permissions for Azure App on Azure Resource Group for Azure Data Collection Endpoints (DCE)

$UseRandomNumber = $true

If ($UseRandomNumber)

{

$Number = [string](Get-Random -Minimum 1000 -Maximum 10000)

}

Else

{

$Number = "1"

}

# Azure App

$AzureAppName = "Demo" + $Number + " - Automation - Log-Ingestion"

$AzAppSecretName = "Secret used for Log-Ingestion"

# Azure Active Directory (AAD)

$TenantId = "<xxxxxx>" # "<put in your Azure AD TenantId>"

# Azure LogAnalytics

$LogAnalyticsSubscription = "<xxxxxx>" # "<put in the SubId of where to place environment>"

$LogAnalyticsResourceGroup = "rg-logworkspaces-client-demo" + $Number + "-t"

$LoganalyticsWorkspaceName = "log-management-client-demo" + $Number + "-t"

$LoganalyticsLocation = "westeurope"

# Azure Data Collection Endpoint

$AzDceName = "dce-" + $LoganalyticsWorkspaceName

$AzDceResourceGroup = "rg-dce-" + $LoganalyticsWorkspaceName

# Azure Data Collection Rules

$AzDcrResourceGroup = "rg-dcr-" + $LoganalyticsWorkspaceName

$AzDcrPrefix = "clt"

$VerbosePreference = "SilentlyContinue" # "Continue"

Step 2 – Adjust the demo-script with the needed variables (sample below).

Demo-script can also be found here

##########################################

# VARIABLES

##########################################

<# ----- onboarding lines ----- BEGIN #>

$TenantId = "xxxxxxxxxxxxf63-9a77-ec94786b7c9e"

$LogIngestAppId = "xxxxxxxxxxxx-b45b-fe5e78392285"

$LogIngestAppSecret = "xxxxxxxxxxxx_NJFrBH_o-QdNR1Ga.T"

$LogAnalyticsWorkspaceResourceId = "/subscriptions/xxxxxx/resourceGroups/rg-logworkspaces-client-demo1-t/providers/Microsoft.OperationalInsights/workspaces/log-management-client-demo1-t"

$DceName = "dce-log-management-client-demo1-t"

$AzDcrResourceGroup = "rg-dcr-log-management-client-demo1-t"

$AzDcrSetLogIngestApiAppPermissionsDcrLevel = $false

$AzDcrPrefix = "clt" # used to make it easy to find the DCRs when searching afterwards

$AzLogDcrTableCreateFromReferenceMachine = @() # you will add your machine like @("MyDeviceName")

$AzLogDcrTableCreateFromAnyMachine = $true

$global:Verbose = $true

<# ----- onboarding lines ----- END #>

Step 3 – Run demos

You can now run the different sections in the script and see the demos. The demos will use most functions in AzLogDcrIngestPS

Start by running lines 1-275, which will load the initial header and build variables

Demo #1 will demonstrate data manipulation + show schema content

Demo #2 will demonstrate collection of data + create LogAnalytics table + DCR + send data

Demo #3 will demonstrate collection of data, remove unnecessary data-properties, create schema with modified structure

Demo #4 will demonstrate schema change of existing table

NOTE:

Have patience 🙂

Making schema changes + creating new pipelines currently involves delays of 10-15 min. Data WILL come – have patience.

When the DCR + table + schema are in place, normal upload of data will happen very fast afterwards.

I have outlined the things to notice during the demos – run the lines one by one (sample below)

#-----------------------------------------------------------------------------------------------

# DEMO DEEP-DIVE !!!

#-----------------------------------------------------------------------------------------------

#-----------------------------------------------------------------------------------------------

# Notice: Original data source doesn't contain ComputerFqdn, Computer, DataCollectionTime

# Notice: Object shows 5 data - but schema shows more properties

#-----------------------------------------------------------------------------------------------

# show content of $OrgVar (original data-array)

$OrgVar[0] | fl

# show schema of $OrgVar (original data-array)

Get-ObjectSchemaAsArray -Data $OrgVar[0]

#-----------------------------------------------------------------------------------------------

# Notice: modified object (uploaded) contains Computer, ComputerFqdn, DataCollectionTime

# Notice: modified object shows all data

#-----------------------------------------------------------------------------------------------

# show content of $DataVariable (modified data-array)

$DataVariable[0] | fl

# show schema of $DataVariable (modified data-array)

Get-ObjectSchemaAsArray -Data $DataVariable[0]

Architecture, Schema & Networking

Below you can see the flow of the data upload. Data will be uploaded into custom logs (v2) in Azure LogAnalytics workspace – using Log ingestion API, Azure Data Collection Rules (DCR) and Azure Data Collection Endpoints (DCE).

Schema

Both the DCR and the LogAnalytics table have a schema, which needs to match the schema of the source object. This is handled by functions in the AzLogDcrIngestPS module.

Video 1m 40s – Automatic creation of 2 tables & DCRs (verbose mode)

Video 1m 37s – Automatic creation of 2 tables & DCRs (normal mode)

Video 1m 34s – See schema of DCR and table

AzLogDcrIngestPS supports 2 modes for managing the schema: Merge and Overwrite

SchemaMode = Merge (default)

If you set SchemaMode = Merge, new properties from the source object are added (merged) into the current schema of the LogAnalytics table. The DCR imports the schema from the LogAnalytics table to ensure they are identical.

Merge is the default mode if you don’t define the SchemaMode parameter on these functions: CheckCreateUpdate-TableDcr-Structure, CreateUpdate-AzLogAnalyticsCustomLogTableDcr, CreateUpdate-AzDataCollectionRuleLogIngestCustomLog

SchemaMode = Overwrite

If you set SchemaMode = Overwrite, then the schema in DCR and table will be overwritten (updated) – based on the source object schema.
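To make the difference between the two modes concrete, here is a minimal sketch of the idea. It is not the module's implementation: `Resolve-SchemaSketch` is a hypothetical helper, and representing a schema as an array of objects with `name` and `type` properties is an assumption made for illustration.

```powershell
# Hypothetical helper (not part of AzLogDcrIngestPS): combines two schemas,
# each given as an array of objects with 'name' and 'type' properties.
function Resolve-SchemaSketch {
    param(
        [Parameter(Mandatory)] [object[]] $CurrentSchema,
        [Parameter(Mandatory)] [object[]] $SourceSchema,
        [ValidateSet('Merge', 'Overwrite')] [string] $SchemaMode = 'Merge'
    )

    if ($SchemaMode -eq 'Overwrite') {
        # Overwrite: the source object schema replaces the current schema
        return $SourceSchema
    }

    # Merge: keep every existing column, then append the columns that are new
    $existingNames = @($CurrentSchema | ForEach-Object { $_.name })
    $newColumns    = @($SourceSchema | Where-Object { $existingNames -notcontains $_.name })
    return @($CurrentSchema) + $newColumns
}

# Current table schema and the schema of a changed source object (made-up columns)
$current = @(
    [pscustomobject]@{ name = 'Computer'; type = 'string' }
    [pscustomobject]@{ name = 'Model';    type = 'string' }
)
$source = @(
    [pscustomobject]@{ name = 'Computer';     type = 'string' }
    [pscustomobject]@{ name = 'UserLoggedOn'; type = 'string' }
)

$merged      = Resolve-SchemaSketch -CurrentSchema $current -SourceSchema $source -SchemaMode Merge
$overwritten = Resolve-SchemaSketch -CurrentSchema $current -SourceSchema $source -SchemaMode Overwrite
```

In this sketch, Merge keeps the existing Model column and adds UserLoggedOn, while Overwrite leaves only the columns found in the source object.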

Networking

You have 3 options for connectivity to Azure for data upload:

| Upload method | Connectivity Details | OS compliance |
|---|---|---|
| Public access | REST endpoint sends to DCE via public IP of DCE | Endpoint supports TLS 1.2 |
| Private access | REST endpoint sends to DCE via private link of DCE | Endpoint supports TLS 1.2 |
| Log-hub | REST endpoint sends data via log-hub – a concept I have built. | Endpoint doesn’t support TLS 1.2. Azure will not accept connectivity from these devices directly |
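For endpoints that support TLS 1.2 but run an older Windows PowerShell session where it is not enabled by default, the following sketch shows one common way to enable it for the current session. It is an illustration only and not something the module is known to do. It uses the .NET `ServicePointManager` class, which mainly affects Windows PowerShell 5.1, and it cannot help an operating system that lacks TLS 1.2 support altogether.

```powershell
# Protocols currently enabled for .NET-based web requests in this session
$tls12   = [Net.SecurityProtocolType]::Tls12
$enabled = [Net.ServicePointManager]::SecurityProtocol

# Add TLS 1.2 without removing protocols that are already enabled
if ([int]($enabled -band $tls12) -eq 0) {
    [Net.ServicePointManager]::SecurityProtocol = $enabled -bor $tls12
}

# $true when TLS 1.2 is now part of the enabled protocols
$tls12Enabled = [int]([Net.ServicePointManager]::SecurityProtocol -band $tls12) -ne 0
```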

Internet-connected endpoints – OS-level compliance

You need to allow the following endpoints in your firewall:

| Endpoint | Purpose | Port | Direction | Bypass HTTPS Inspection |
|---|---|---|---|---|
| global.handler.control.monitor.azure.com | Access control service | Port 443 | Outbound | Yes |
| <dce logs ingestion uri> sample https://dce-log-platform-management-client-demo-p-iur0.westeurope-1.ingest.monitor.azure.com | Ingest logs data | Port 443 | Outbound | Yes |

No internet access or OS-level non-compliance, e.g. running TLS 1.0/1.1

Check out the log-hub concept using this link

Security

Code signing

Both the ClientInspector.ps1-file and the AzLogDcrIngestPS module (AzLogDcrIngest.psm1) are signed with my code signing certificate (2LINKIT – my company). This way you can run it, if you require scripts to be signed.

Of course you can also choose to sign it with your own internal code signing certificate.

Please download the public key certificate and put it into your ‘trusted publisher’ container to trust the publisher (2LINKIT – my company). You can deploy this using Intune or Group Policy.

Intune deployment doesn’t require trusted publisher to be in place

By default, Intune will do a BYPASS when running a remediation script.

Azure app for log ingestion & table/dcr schema management

You can choose to have one app for both log ingestion and table/DCR schema management, if you want to keep it simple. Alternatively, you can choose to have 2 Azure apps (recommended) to separate the log ingestion process from the table/DCR schema management process.

One Azure app for both log ingestion and table/schema management

If you want to keep it simple, you can choose to go with a single Azure app that is used for both log ingestion and table/schema management.

You will need to define variables in your script for the AppId, Secret and TenantId

##########################################

# VARIABLES

##########################################

$TenantId = "xxxxxx"

$LogIngestAppId = "xxxxxx"

$LogIngestAppSecret = "xxxxx"

You need to set permissions according to these settings:

| Target | Delegation To | Azure RBAC Permission | Comment |
|---|---|---|---|
| Azure Resource Group for Azure Data Collection Rules | Azure app used for log ingestion | Monitoring Metrics Publisher | used to send in data |
| Azure Resource Group for Azure Data Collection Endpoint | Azure app used for log ingestion | Reader | needed to retrieve information about DCE – used as part of uploading data |
| Azure Resource Group for Azure Data Collection Rules | Azure app used for log ingestion | Contributor | needed to create/update DCRs |
| Azure Resource Group for Azure Data Collection Endpoint | Azure app used for log ingestion | Contributor | needed to create/update DCEs (if needed after deployment) |
| Azure LogAnalytics Workspace | Azure app used for log ingestion | Contributor | needed to create/update Azure LogAnalytics custom log tables |

Two Azure apps to separate log ingestion and table/DCR schema management

If you want to separate the log ingestion process from the table/DCR schema management process, you can add one more Azure app, which is used for table/DCR/schema management.

You need to set permissions according to these settings:

| Target | Delegation To | Azure RBAC Permission | Comment |
|---|---|---|---|
| Azure Resource Group for Azure Data Collection Rules | Azure app used for log ingestion | Monitoring Metrics Publisher | used to send in data |
| Azure Resource Group for Azure Data Collection Endpoint | Azure app used for log ingestion | Reader. When you run this script with the default config, it gives the log ingestion account Contributor permissions; adjust this so the log ingestion app only has Reader permissions. | needed to retrieve information about DCE – used as part of uploading data |
| Azure Resource Group for Azure Data Collection Rules | Azure app used for table/DCR management | Contributor | needed to create/update DCRs |
| Azure Resource Group for Azure Data Collection Endpoint | Azure app used for table/DCR management | Contributor | needed to create/update DCEs and also needed to create/update a DCR with references to a DCE |
| Azure LogAnalytics Workspace | Azure app used for table/DCR management | Contributor | needed to create/update Azure LogAnalytics custom log tables |

Source data – what data can I use ?

You can use any source data that can be retrieved into PowerShell (WMI, CIM, external data, REST API, XML, JSON, CSV, etc.)

It is very important to understand that the data typically needs to be manipulated before you send it – to ensure it is valid and any irrelevant data has been removed.

ClientInspector uses all of the 25 functions within the PowerShell module, AzLogDcrIngestPS, to handle source data manipulation: removing “noise” in data, renaming prohibited columns in tables/DCR – and supporting needs for transparency with extra insight like UserLoggedOn, CollectionTime, Computer.
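As a rough illustration of what such manipulation means, the sketch below removes an unwanted column and adds context columns using only built-in cmdlets. It is not how the module functions are implemented. The sample records and the `InternalCache` column are made up for the example.

```powershell
# Sample source data standing in for WMI/CIM/REST output (values are made up)
$source = @(
    [pscustomobject]@{ Name = 'App A'; Version = '1.0'; InternalCache = 'x' * 50 }
    [pscustomobject]@{ Name = 'App B'; Version = '2.3'; InternalCache = 'y' * 50 }
)

# One timestamp (UTC, ISO 8601) shared by all records of this collection run
$collectionTime = (Get-Date).ToUniversalTime().ToString('o')

$prepared = foreach ($record in $source) {
    # Drop the column we consider noise, keep everything else
    $clean = $record | Select-Object -Property * -ExcludeProperty InternalCache

    # Add context columns to every record
    $clean | Add-Member -NotePropertyName Computer       -NotePropertyValue ([Environment]::MachineName)
    $clean | Add-Member -NotePropertyName CollectionTime -NotePropertyValue $collectionTime
    $clean
}

$prepared | Format-Table -AutoSize
```

In a real script you would use the module functions shown in the phases below, which also handle CIM class information and prohibited column names.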

Example of how to use the functions

Normally I would structure my scripts with the same 4-phased structure – sample below from ClientInspector:

Phase 1/4 – Variables (naming – where to send the data)

#-------------------------------------------------------------------------------------------

# Variables

#-------------------------------------------------------------------------------------------

$TableName = 'InvClientComputerInfoSystemV2' # must not contain _CL

$DcrName = "dcr-" + $AzDcrPrefixClient + "-" + $TableName + "_CL"

Phase 2/4 – Data Collection

#-------------------------------------------------------------------------------------------

# Collecting data (in)

#-------------------------------------------------------------------------------------------

Write-Output ""

Write-Output "Collecting Computer system information ... Please Wait !"

$DataVariable = Get-CimInstance -ClassName Win32_ComputerSystem

Phase 3/4 – Data Manipulation (ensure data is in correct format, any “noise” is removed and relevant information is added)

#-------------------------------------------------------------------------------------------

# Preparing data structure

#-------------------------------------------------------------------------------------------

# convert CIM array to PSCustomObject and remove CIM class information

$DataVariable = Convert-CimArrayToObjectFixStructure -data $DataVariable -Verbose:$Verbose

# add CollectionTime to existing array

$DataVariable = Add-CollectionTimeToAllEntriesInArray -Data $DataVariable -Verbose:$Verbose

# add Computer & UserLoggedOn info to existing array

$DataVariable = Add-ColumnDataToAllEntriesInArray -Data $DataVariable -Column1Name Computer -Column1Data $Env:ComputerName -Column2Name UserLoggedOn -Column2Data $UserLoggedOn

# Validating/fixing schema data structure of source data

$DataVariable = ValidateFix-AzLogAnalyticsTableSchemaColumnNames -Data $DataVariable -Verbose:$Verbose

# Aligning data structure with schema (requirement for DCR)

$DataVariable = Build-DataArrayToAlignWithSchema -Data $DataVariable -Verbose:$Verbose

Phase 4/4 – Data Out (send to LogAnalytics) – combined functions

#-------------------------------------------------------------------------------------------

# Create/Update Schema for LogAnalytics Table & Data Collection Rule schema

#-------------------------------------------------------------------------------------------

CheckCreateUpdate-TableDcr-Structure -AzLogWorkspaceResourceId $LogAnalyticsWorkspaceResourceId `

-SchemaMode Merge `

-AzAppId $LogIngestAppId -AzAppSecret $LogIngestAppSecret -TenantId $TenantId -Verbose:$Verbose `

-DceName $DceName -DcrName $DcrName -TableName $TableName -Data $DataVariable `

-LogIngestServicePricipleObjectId $AzDcrLogIngestServicePrincipalObjectId `

-AzDcrSetLogIngestApiAppPermissionsDcrLevel $AzDcrSetLogIngestApiAppPermissionsDcrLevel `

-AzLogDcrTableCreateFromAnyMachine $AzLogDcrTableCreateFromAnyMachine `

-AzLogDcrTableCreateFromReferenceMachine $AzLogDcrTableCreateFromReferenceMachine

#-----------------------------------------------------------------------------------------------

# Upload data to LogAnalytics using DCR / DCE / Log Ingestion API

#-----------------------------------------------------------------------------------------------

Post-AzLogAnalyticsLogIngestCustomLogDcrDce-Output -DceName $DceName -DcrName $DcrName -Data $DataVariable -TableName $TableName `

-AzAppId $LogIngestAppId -AzAppSecret $LogIngestAppSecret -TenantId $TenantId -Verbose:$Verbose

TIP: error 513 – entity is too large

By default, the Post function in AzLogDcrIngestPS sends the data in batches, sized from a calculated average size per record. If your record set (rows) contains records of very different sizes, you might receive an error 513.

The cause is that you are hitting the limit of 1 MB per upload (an Azure Pipeline limitation). Microsoft wants to receive many smaller chunks of data, as this is a shared environment. I have seen this issue when retrieving the list of all installed applications, where the size of the stored information varies a lot between applications.
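To show why average-based batching can overshoot, here is a minimal sketch of an alternative that measures each record. It is not the module's implementation: `Split-IntoSizedBatches` is a hypothetical helper, and the byte accounting is a simplification of the real JSON payload size.

```powershell
# Hypothetical helper (not the module's implementation): groups records into
# batches whose approximate JSON size stays below a byte limit.
function Split-IntoSizedBatches {
    param(
        [Parameter(Mandatory)] [object[]] $Data,
        [int] $MaxBytes = 1MB
    )

    $batches = New-Object System.Collections.Generic.List[object]
    $current = New-Object System.Collections.Generic.List[object]
    $currentBytes = 2   # the surrounding "[" and "]" of the JSON array

    foreach ($record in $Data) {
        $json  = $record | ConvertTo-Json -Depth 20 -Compress
        $bytes = [Text.Encoding]::UTF8.GetByteCount($json) + 1   # +1 for the separating comma

        # Start a new batch when adding this record would exceed the limit
        if ($current.Count -gt 0 -and ($currentBytes + $bytes) -gt $MaxBytes) {
            $batches.Add($current.ToArray())
            $current = New-Object System.Collections.Generic.List[object]
            $currentBytes = 2
        }
        $current.Add($record)
        $currentBytes += $bytes
    }
    if ($current.Count -gt 0) { $batches.Add($current.ToArray()) }

    # The leading comma stops PowerShell from unrolling the list of batches
    return ,$batches.ToArray()
}

# Records of very different size, similar to the installed-applications case
$records = @(
    [pscustomobject]@{ Name = 'small'; Notes = 'a' * 100 }
    [pscustomobject]@{ Name = 'large'; Notes = 'b' * 900 }
    [pscustomobject]@{ Name = 'tiny';  Notes = 'c' * 10 }
)
$batches = Split-IntoSizedBatches -Data $records -MaxBytes 1000
```

With the small 1000-byte limit used here, the first record ends up alone in one batch and the other two share a second batch. A single record larger than the limit would still form its own oversized batch, so this sketch does not replace filtering out bulky columns.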

You can mitigate this issue by adding the parameter -BatchAmount to the Post command. If you want to be sure, set it to 1.

Post-AzLogAnalyticsLogIngestCustomLogDcrDce-Output -DceName $DceName `

-DcrName $DcrName `

-Data $DataVariable `

-TableName $TableName `

-AzAppId $LogIngestAppId `

-AzAppSecret $LogIngestAppSecret `

-TenantId $TenantId `

-BatchAmount 1 `

-Verbose:$Verbose

Phase 4/4 “under the hood” – Data Out (send to LogAnalytics)

#-----------------------------------------------------------------------------------------------

# Check if table and DCR exist - or schema must be updated due to source object schema changes

#-----------------------------------------------------------------------------------------------

# Get insight about the schema structure

$Schema = Get-ObjectSchemaAsArray -Data $Data

$StructureCheck = Get-AzLogAnalyticsTableAzDataCollectionRuleStatus `

-AzLogWorkspaceResourceId $AzLogWorkspaceResourceId `

-TableName $TableName `

-DcrName $DcrName `

-SchemaSourceObject $Schema `

-AzAppId $AzAppId `

-AzAppSecret $AzAppSecret `

-TenantId $TenantId `

-Verbose:$Verbose

#-----------------------------------------------------------------------------------------------

# Structure check = $true -> Create/update table & DCR with necessary schema

#-----------------------------------------------------------------------------------------------

# build schema to be used for LogAnalytics Table

$Schema = Get-ObjectSchemaAsHash -Data $Data `

-ReturnType Table `

-Verbose:$Verbose

CreateUpdate-AzLogAnalyticsCustomLogTableDcr -AzLogWorkspaceResourceId $AzLogWorkspaceResourceId `

-SchemaSourceObject $Schema `

-SchemaMode $SchemaMode `

-TableName $TableName `

-AzAppId $AzAppId `

-AzAppSecret $AzAppSecret `

-TenantId $TenantId `

-Verbose:$Verbose

# build schema to be used for DCR

$Schema = Get-ObjectSchemaAsHash -Data $Data -ReturnType DCR

CreateUpdate-AzDataCollectionRuleLogIngestCustomLog -AzLogWorkspaceResourceId $AzLogWorkspaceResourceId `

-SchemaSourceObject $Schema `

-SchemaMode $SchemaMode `

-DceName $DceName `

-DcrName $DcrName `

-TableName $TableName `

-LogIngestServicePricipleObjectId $LogIngestServicePricipleObjectId `

-AzDcrSetLogIngestApiAppPermissionsDcrLevel $AzDcrSetLogIngestApiAppPermissionsDcrLevel `

-AzAppId $AzAppId `

-AzAppSecret $AzAppSecret `

-TenantId $TenantId `

-Verbose:$Verbose

$AzDcrDceDetails = Get-AzDcrDceDetails -DcrName $DcrName `

-DceName $DceName `

-AzAppId $AzAppId `

-AzAppSecret $AzAppSecret `

-TenantId $TenantId `

-Verbose:$Verbose

Post-AzLogAnalyticsLogIngestCustomLogDcrDce -DceUri $AzDcrDceDetails[2] `

-DcrImmutableId $AzDcrDceDetails[6] `

-TableName $TableName `

-DcrStream $AzDcrDceDetails[7] `

-Data $Data `

-BatchAmount $BatchAmount `

-AzAppId $AzAppId `

-AzAppSecret $AzAppSecret `

-TenantId $TenantId `

-Verbose:$Verbose
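The final Post step above takes a DCE URI, a DCR immutable ID and a stream name. As a rough sketch of what an upload request to the Log Ingestion API looks like, the helper below only builds the request and sends nothing. It is not a copy of the module's code: `New-LogIngestionRequestSketch` is a hypothetical name, the URI pattern and api-version follow Microsoft's public Logs Ingestion API documentation as I understand it, and the DCE URI, immutable ID, `Custom-` stream name and token are placeholder assumptions.

```powershell
# Hypothetical helper: builds the URI, headers and body for one upload.
# Verify the URI pattern and api-version against the current Microsoft docs before use.
function New-LogIngestionRequestSketch {
    param(
        [Parameter(Mandatory)] [string]   $DceUri,
        [Parameter(Mandatory)] [string]   $DcrImmutableId,
        [Parameter(Mandatory)] [string]   $StreamName,
        [Parameter(Mandatory)] [object[]] $Data,
        [Parameter(Mandatory)] [string]   $AccessToken
    )

    [pscustomobject]@{
        Uri     = '{0}/dataCollectionRules/{1}/streams/{2}?api-version=2023-01-01' -f $DceUri.TrimEnd('/'), $DcrImmutableId, $StreamName
        Headers = @{ Authorization = "Bearer $AccessToken"; 'Content-Type' = 'application/json' }
        # The body is sent as a JSON array, even for a single record
        Body    = ConvertTo-Json -InputObject @($Data) -Depth 20 -Compress
    }
}

# Placeholder values, for illustration only
$request = New-LogIngestionRequestSketch -DceUri 'https://dce-example.westeurope-1.ingest.monitor.azure.com' `
    -DcrImmutableId 'dcr-00000000000000000000000000000000' `
    -StreamName 'Custom-InvClientComputerInfoSystemV2_CL' `
    -Data @([pscustomobject]@{ Computer = 'PC01'; Model = 'Demo' }) `
    -AccessToken '<token>'

# To send it: Invoke-RestMethod -Uri $request.Uri -Method Post -Headers $request.Headers -Body $request.Body
```

Keeping request construction separate from sending makes it easy to inspect the payload size and target URI before anything leaves the machine.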

How can I modify the schema of LogAnalytics table & Data Collection Rule, when the source object schema changes ?

Both the DCR and the LogAnalytics table have a schema, which needs to match the schema of the source object. This is handled by functions in the AzLogDcrIngestPS module.

AzLogDcrIngestPS fully supports modifying the schema automatically if it detects changes. This is controlled by a variable (AzLogDcrTableCreateFromAnyMachine).

Video 1m 40s – Automatic creation of 2 tables & DCRs (verbose mode)

Video 1m 37s – Automatic creation of 2 tables & DCRs (normal mode)

Video 1m 34s – See schema of DCR and table

I recommend schema changes to be managed by you – and not happen automatically.

AzLogDcrIngestPS supports 2 modes for managing the schema: Merge and Overwrite

SchemaMode = Merge (default)

If you set SchemaMode = Merge, new properties from the source object are added (merged) into the current schema of the LogAnalytics table. The DCR imports the schema from the LogAnalytics table to ensure they are identical.

Merge is the default mode if you don’t define the SchemaMode parameter on these functions: CheckCreateUpdate-TableDcr-Structure, CreateUpdate-AzLogAnalyticsCustomLogTableDcr, CreateUpdate-AzDataCollectionRuleLogIngestCustomLog

SchemaMode = Overwrite

If you set SchemaMode = Overwrite, then the schema in DCR and table will be overwritten (updated) – based on the source object schema.

Recommended function to understand schema

I recommend using the following function to understand the schema of the data source:

Get-ObjectSchemaAsArray -Data $DataVariable -Verbose:$Verbose

Internal error 500 mitigation (very rare !)

In most cases, the changes of the schema of tables and DCRs will be done using a PUT (overwrite) command, which will add new properties to the table & DCR.

Right now, LogAnalytics will throw an ‘internal server error 500’ if the schema type of an existing property changes. This is very rare, but I have seen it happen, for example when a string value (TRUE) was changed to a boolean ($true).

AzLogDcrIngestPS will fix this by deleting the table and re-creating it. You will not lose any data, as it is kept in the database – and you will see it again when the table and properties are re-created.

Problem has been escalated to the Azure LogAnalytics team and will be fixed.
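If you want to spot such type changes before uploading, a comparison along these lines can help. This is a minimal sketch, not a function of the module: `Get-SchemaTypeChangeSketch` is a hypothetical helper, and the schema representation (objects with `name` and `type` properties) is an assumption for illustration.

```powershell
# Hypothetical helper: lists columns that exist in both schemas but with a different type
function Get-SchemaTypeChangeSketch {
    param(
        [Parameter(Mandatory)] [object[]] $CurrentSchema,
        [Parameter(Mandatory)] [object[]] $SourceSchema
    )

    foreach ($column in $SourceSchema) {
        $existing = $CurrentSchema | Where-Object { $_.name -eq $column.name } | Select-Object -First 1
        if ($existing -and $existing.type -ne $column.type) {
            [pscustomobject]@{
                Name    = $column.name
                OldType = $existing.type
                NewType = $column.type
            }
        }
    }
}

# Made-up schemas: IsEnabled was a string in the table, but is now a boolean in the source
$tableSchema = @(
    [pscustomobject]@{ name = 'Computer';  type = 'string' }
    [pscustomobject]@{ name = 'IsEnabled'; type = 'string' }
)
$sourceSchema = @(
    [pscustomobject]@{ name = 'Computer';  type = 'string' }
    [pscustomobject]@{ name = 'IsEnabled'; type = 'boolean' }
)

$changes = @(Get-SchemaTypeChangeSketch -CurrentSchema $tableSchema -SourceSchema $sourceSchema)
$changes | Format-Table -AutoSize
```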

Details about functions

Category: Data Manipulation

| Function name | Synopsis |
|---|---|
| Add-CollectionTimeToAllEntriesInArray | Adds property CollectionTime (based on current time) to all entries in the object |
| Add-ColumnDataToAllEntriesInArray | Adds up to 3 extra columns and data to the object |
| Build-DataArrayToAlignWithSchema | Rebuilds the source object to match modified schema structure – used after usage of ValidateFix-AzLogAnalyticsTableSchemaColumnNames |
| Convert-CimArrayToObjectFixStructure | Converts CIM array and removes CIM class information |
| Convert-PSArrayToObjectFixStructure | Converts PS array and removes PS class information |
| Filter-ObjectExcludeProperty | Removes columns from the object which are considered “noise” and shouldn’t be sent to logs |
| ValidateFix-AzLogAnalyticsTableSchemaColumnNames | Validates that the column names in the schema are valid according to the requirements for LogAnalytics tables. Fixes any issues by rebuilding the source object |

Category: Table/DCR/Schema/Transformation management

| Function name | Synopsis |
|---|---|
| Get-AzLogAnalyticsTableAzDataCollectionRuleStatus | Gets status about Azure LogAnalytics tables and Data Collection Rule |
| Get-AzDcrDceDetails | Retrieves information about data collection rules and data collection endpoints – using Azure Resource Graph |
| Get-AzDataCollectionRuleTransformKql | Retrieves current data transformation in DCR (if found) |
| CheckCreateUpdate-TableDcr-Structure | Create or Update Azure Data Collection Rule (DCR) used for log ingestion to Azure LogAnalytics using Log Ingestion API (combined) |
| CreateUpdate-AzDataCollectionRuleLogIngestCustomLog | Create or Update Azure Data Collection Rule (DCR) used for log ingestion to Azure LogAnalytics using Log Ingestion API |
| CreateUpdate-AzLogAnalyticsCustomLogTableDcr | Create or Update Azure LogAnalytics Custom Log table – used together with Data Collection Rules (DCR) for Log Ingestion API upload to LogAnalytics |
| Get-ObjectSchemaAsArray | Gets the schema of the object as array with column-names and their type (string, boolean, dynamic, etc.) |
| Get-ObjectSchemaAsHash | Gets the schema of the object as hash table with column-names and their type (string, boolean, dynamic, etc.) |
| Delete-AzDataCollectionRules | Deletes the Azure Data Collection Rules defined in like-format, so you can quickly clean up, for example after a demo or testing |
| Delete-AzLogAnalyticsCustomLogTables | Deletes the Azure LogAnalytics custom log tables defined in like-format, so you can quickly clean up, for example after a demo or testing |
| Update-AzDataCollectionRuleDceEndpoint | Updates the DceEndpointUri of the Data Collection Rule |
| Update-AzDataCollectionRuleResetTransformKqlDefault | Updates the transformKql parameter on an existing DCR – and resets it back to default |
| Update-AzDataCollectionRuleTransformKql | Updates the transformKql parameter on an existing DCR with the provided parameter |

Category: Data Out (upload to Azure LogAnalytics)

| Function name | Synopsis |
|---|---|
| Post-AzLogAnalyticsLogIngestCustomLogDcrDce | Send data to LogAnalytics using Log Ingestion API and Data Collection Rule |
| Post-AzLogAnalyticsLogIngestCustomLogDcrDce-Output | Send data to LogAnalytics using Log Ingestion API and Data Collection Rule (combined) |
| Get-AzDceListAll | Builds list of all Data Collection Endpoints (DCEs), which can be retrieved by Azure using the RBAC context of the Log Ingestion App |
| Get-AzDcrListAll | Builds list of all Data Collection Rules (DCRs), which can be retrieved by Azure using the RBAC context of the Log Ingestion App |

Category: Support functions (security)

| Function name | Synopsis |
|---|---|
| Get-AzAccessTokenManagement | Get access token for connecting management.azure.com – used for REST API connectivity |
